Boosting refers to a family of algorithms that convert weak learners into strong learners. How does this happen?


No weak learner becomes strong; it is the *ensemble* of the weak learners that turns out to be strong. – desertnaut Sep 26 '20 at 23:41


An answer would be too long, so here's a rough idea. When we talk about learning, we have certain guarantees on the error of a learner. A weak learner has a very poor guarantee: it only needs to do slightly better than random guessing. But when we combine many such weak learners, trained sequentially, each one focuses on approximating the parts of the data domain that its predecessors handled badly. The ensemble then gives the most weight to the learners with the lowest error (and thus the most reliable ones) and the least weight to the poorest ones when making a classification. – Sep 27 '20 at 10:55


There are some theoretical guarantees and proofs to this effect. – Sep 27 '20 at 10:58
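One well-known guarantee of this kind is AdaBoost's training-error bound (it applies to AdaBoost specifically, not to every boosting algorithm). Writing $\varepsilon_t = \tfrac{1}{2} - \gamma_t$ for the weighted error of the $t$-th weak learner, $T$ for the number of rounds, and $H$ for the final weighted vote over $m$ training examples $(x_i, y_i)$:

$$
\frac{1}{m}\sum_{i=1}^{m} \mathbf{1}\big[H(x_i) \neq y_i\big]
\;\le\; \prod_{t=1}^{T} 2\sqrt{\varepsilon_t(1-\varepsilon_t)}
\;=\; \prod_{t=1}^{T} \sqrt{1-4\gamma_t^{2}}
\;\le\; \exp\!\Big(-2\sum_{t=1}^{T}\gamma_t^{2}\Big)
$$

So if every weak learner beats chance by at least some fixed $\gamma > 0$, the training error is at most $e^{-2T\gamma^{2}}$ and shrinks exponentially in the number of rounds. This is the precise sense in which "slightly better than random" learners combine into a strong one on the training set.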

3 Answers

As @desertnaut mentioned in the comments:

> No weak learner becomes strong; it is the ensemble of the weak learners that turns out to be strong.

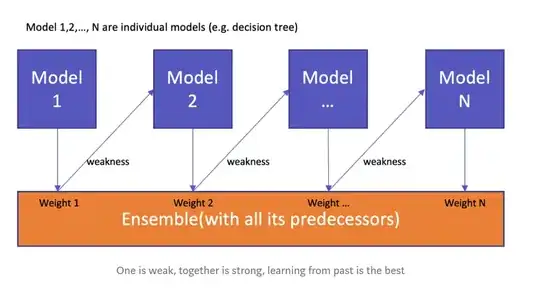

Boosting is an ensemble method that combines multiple models (called weak learners) to produce a single, more accurate model (a strong learner).

Basically, boosting trains weak learners sequentially, each one trying to correct the errors of its predecessors. For boosting, we need to specify a weak model (e.g. linear regression or shallow decision trees), and then we try to improve each weak learner to learn something from the data that its predecessors got wrong.
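The "each one corrects its predecessors" idea can be sketched with gradient boosting for regression. This is a minimal illustration under stated assumptions, not a library implementation: the weak model is a hypothetical least-squares regression stump (`fit_stump`), the loss is squared error, and `learning_rate=0.5` and the toy data are arbitrary choices. With squared error, "what the predecessors got wrong" is simply the residual, so each new stump is fitted to the current residuals.

```python
# Minimal gradient-boosting sketch for 1-D regression with squared-error loss.
# Assumptions (illustrative only): regression stumps as the weak model,
# learning_rate=0.5, and plain Python lists instead of a library.

def fit_stump(xs, targets):
    """Least-squares regression stump: one threshold, a constant on each side."""
    best = None
    for t in sorted(set(xs)):
        left = [r for x, r in zip(xs, targets) if x <= t]
        right = [r for x, r in zip(xs, targets) if x > t]
        left_val = sum(left) / len(left)
        right_val = sum(right) / len(right) if right else left_val
        sse = sum((r - (left_val if x <= t else right_val)) ** 2
                  for x, r in zip(xs, targets))
        if best is None or sse < best[0]:
            best = (sse, t, left_val, right_val)
    _, t, left_val, right_val = best
    return lambda x: left_val if x <= t else right_val


def gradient_boost(xs, ys, n_rounds=20, learning_rate=0.5):
    base = sum(ys) / len(ys)  # round 0: predict the mean everywhere
    stumps = []

    def predict(x):
        return base + sum(learning_rate * stump(x) for stump in stumps)

    for _ in range(n_rounds):
        # For squared loss the negative gradient is just the residual,
        # i.e. what the current ensemble still gets wrong.
        residuals = [y - predict(x) for x, y in zip(xs, ys)]
        stumps.append(fit_stump(xs, residuals))
    return predict


def mse(model, xs, ys):
    return sum((model(x) - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


xs = [1, 2, 3, 4, 5, 6, 7, 8]
ys = [1.0, 1.5, 3.0, 3.5, 5.0, 5.5, 7.0, 7.5]
print(mse(gradient_boost(xs, ys, n_rounds=1), xs, ys))
print(mse(gradient_boost(xs, ys, n_rounds=20), xs, ys))
```

Each round can only lower (never raise) the training error, because every stump is a least-squares fit to the current residuals and is added with a shrinkage factor between 0 and 2.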

AdaBoost is a boosting algorithm that commonly uses a decision tree with a single split (a decision stump) as its weak learner. Other boosting algorithms include gradient boosting and XGBoost.
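To make the AdaBoost description concrete, here is a minimal pure-Python sketch with decision stumps on 1-D data and labels in {-1, +1}. It is an illustration under assumptions, not scikit-learn's or any other library's implementation: the helper names (`train_stump`, `stump_predict`, `adaboost`), the stump form "predict `polarity` if x > threshold, else the opposite", and the toy data are all hypothetical. The learner weight `alpha = 0.5 * ln((1 - err) / err)` and the exponential reweighting follow the standard AdaBoost update.

```python
import math

# Minimal AdaBoost sketch with decision stumps on 1-D data, labels in {-1, +1}.
# Assumptions (illustrative): pure-Python lists, stumps of the form
# "predict `polarity` if x > threshold else -polarity".

def train_stump(xs, ys, weights):
    """Return (weighted_error, threshold, polarity) of the best stump."""
    values = sorted(set(xs))
    best = None
    for t in [values[0] - 1] + values:  # includes a threshold below every x
        for polarity in (1, -1):
            err = sum(w for x, y, w in zip(xs, ys, weights)
                      if (polarity if x > t else -polarity) != y)
            if best is None or err < best[0]:
                best = (err, t, polarity)
    return best


def stump_predict(x, t, polarity):
    return polarity if x > t else -polarity


def adaboost(xs, ys, n_rounds=10):
    n = len(xs)
    weights = [1.0 / n] * n  # start with equal attention on every example
    ensemble = []  # (alpha, threshold, polarity) per weak learner
    for _ in range(n_rounds):
        err, t, pol = train_stump(xs, ys, weights)
        if err >= 0.5:
            break  # weak learner is no better than chance; stop
        err = max(err, 1e-10)  # guard against division by zero for a perfect stump
        alpha = 0.5 * math.log((1 - err) / err)  # low error -> bigger say
        ensemble.append((alpha, t, pol))
        # Misclassified examples (y * h(x) = -1) get heavier, the rest lighter.
        weights = [w * math.exp(-alpha * y * stump_predict(x, t, pol))
                   for x, y, w in zip(xs, ys, weights)]
        total = sum(weights)
        weights = [w / total for w in weights]

    def predict(x):
        score = sum(a * stump_predict(x, t, p) for a, t, p in ensemble)
        return 1 if score >= 0 else -1

    return predict


xs = [1, 2, 3, 4, 5, 6]
ys = [1, 1, -1, -1, 1, 1]  # no single stump can separate this pattern
for rounds in (1, 3):
    model = adaboost(xs, ys, n_rounds=rounds)
    correct = sum(model(x) == y for x, y in zip(xs, ys))
    print(rounds, "round(s):", correct, "of", len(xs), "correct")
```

On this toy pattern a single stump gets 4 of the 6 points right, while the weighted vote of three stumps classifies all 6 correctly: no individual stump became stronger, only the ensemble did.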


we try to improve each weak learner to learn something from the data – Sivaram Rasathurai Sep 29 '20 at 02:32

You take a bunch of weak learners, each of them trained on a subset of the data.

You then get all of them to make a prediction and learn how much you can trust each one, which results in a weighted vote or some other combination of the individual predictions.
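A weighted vote of this kind can be sketched in a few lines. This assumes, purely for illustration, that trust is derived from each learner's error rate using AdaBoost's formula; the error rates and votes below are hypothetical numbers, and other combination rules are possible.

```python
import math

# Sketch of "learn how much you can trust each one" as a weighted vote.
# Assumption: trust comes from each learner's error rate via AdaBoost's
# formula alpha = 0.5 * ln((1 - error) / error); error must be in (0, 1).

def trust(error_rate):
    return 0.5 * math.log((1 - error_rate) / error_rate)


def weighted_vote(votes, weights):
    """votes: +1/-1 predictions, one per learner; weights: trust per learner."""
    score = sum(w * v for w, v in zip(weights, votes))
    return 1 if score >= 0 else -1


error_rates = [0.10, 0.40, 0.45]  # hypothetical error rates of three learners
votes = [+1, -1, -1]              # what each learner predicts for one input
weights = [trust(e) for e in error_rates]
print(weighted_vote(votes, weights))          # -> 1 (the reliable learner wins)
print(weighted_vote(votes, [1.0, 1.0, 1.0]))  # -> -1 (plain majority)
```

The point of the weighting is visible here: two unreliable learners outvote the reliable one under a plain majority, but not once each vote is scaled by how much that learner can be trusted.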


In boosting, we improve the overall metrics of the model by building weak models sequentially, with each one building upon the weak metrics (that is, the errors) of the previous models.

We start by applying a basic, non-specific algorithm to the problem, which returns a weak prediction function from an arbitrary starting point (such as sparse weights, or equal weights/attention on every example). In the following rounds, we improve on this by increasing the weights of the examples with a higher error rate, so that the next weak model pays more attention to them. After many iterations, we combine the weak prediction functions into a single Strong Prediction Function, which has better metrics than the individual weak ones.
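One round of the reweighting step described above can be sketched as follows, assuming AdaBoost's update rule (one common choice, not the only one). The `reweight` helper and the four-example setup are hypothetical, and the sketch assumes the weak learner's weighted error lies strictly between 0 and 0.5.

```python
import math

# One reweighting round, assuming AdaBoost's update rule.
# Start from equal weights; after a weak learner has been evaluated, raise the
# weights of the examples it got wrong and lower the rest.

def reweight(weights, correct):
    """weights: example weights summing to 1; correct: bool per example.

    Assumes the weighted error is strictly between 0 and 0.5.
    """
    error = sum(w for w, c in zip(weights, correct) if not c)
    alpha = 0.5 * math.log((1 - error) / error)  # this learner's say in the vote
    new = [w * math.exp(-alpha if c else alpha) for w, c in zip(weights, correct)]
    total = sum(new)
    return [w / total for w in new], alpha


weights = [0.25, 0.25, 0.25, 0.25]   # equal attention to start
correct = [True, True, True, False]  # the weak learner missed example 4
weights, alpha = reweight(weights, correct)
print([round(w, 3) for w in weights])  # -> [0.167, 0.167, 0.167, 0.5]
```

After the update, the misclassified examples carry exactly half of the total weight, so the previous weak learner would score no better than chance on the new weighting and the next one is forced to learn something different.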

Some popular boosting algorithms:

- AdaBoost

- Gradient Tree Boosting

- XGBoost


What are "weak prediction functions" and "Strong Prediction Function"? These terms are ambiguous because you did not define what you mean by "weak" and "strong", and you also have not defined "prediction function". Also, what do you mean by "weak metrics"? Do you mean metrics according to which the model performs badly? – nbro Sep 27 '20 at 10:35