All else being equal, including total neuron count, I give the following definitions:

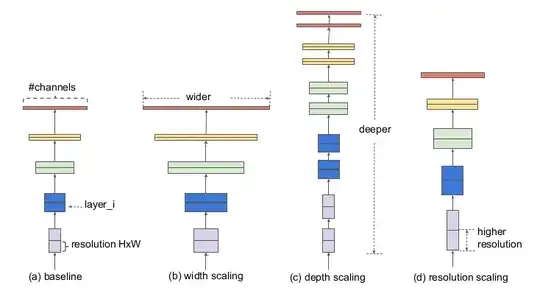

- wide is a parallel ensemble, where good chunks of the neurons have the same inputs because the inputs are shared and they have different outputs.

- deep is a series ensemble, where for the most part neurons have as input the output of other neurons and few inputs are shared.

For CART ensembles the parallel (wide) ensemble is a random forest while the series (deep) ensemble is a gradient boosted machine. For several years the GBM was the "winningest" on kaggle.

Is there a parallel of that applied to Neural networks? Is there some reasonable measure that indicates whether deep outperforms wide when it comes to neural networks? If I had the same count of weights to throw at a tough problem, all else being equal should they be applied more strongly in parallel or in series?