The system I'm trying to implement is a microcontroller with a connected microphone which have to recognise single words. the feature extraction is done using MFCC (and is working).

- the system have to recognise [predefined, up to 20] single words each one up to 1 seconds length

- input audio is sampled with a frequency of 10KHz and 8 bits resolution

- the window is 256 sample wide (25.6 ms), hann windowed with a 15ms step (overlaying windows)

- the total MFCC features representing each window, is about 18 features

I've done the above things, and tested the outputs for accuracy and computation speed so there is not much concern about the computations. now I have to implement a HMM for word recognition. I've read about the HMM and I think these parameters need to be addressed:

- the hidden states are the "actual" pieces of the word with 25.6ms length

represented in 18 MFCC features. and they count up to maximum of 64 sets in a single word (because the maximum length for input word is 1sec and each window is (25.6 - 10)millisecs) - I should use Viterbi algorithm to find out the most probable word spoken untill the current state. so, if the user is saying "STOP", the Viterbi can suggest it (with proper learning of course) when the user has spoken "STO.." . so it's some kind of prediction too.

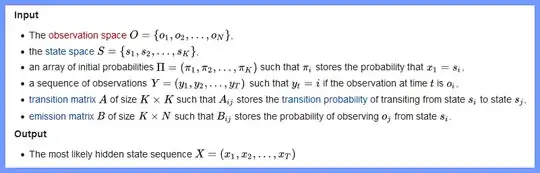

- I have to determine the other HMM parameters like the emission and transition. the wikipedia page for Viterbi which has written the algorithm, shows the input/output as:

from the above:

- what is observation space? the user may talk anything so it seems indefinite to me

- the state space is obviously the set containing all the possible

MFCC feature setsused in the learned word set. how I learn or hardcode that ?

thanks for reading this long question patiently.