I'm working on reimplementing the MuZero paper. In the description of the MCTS (page 12), they indicate that a new node with associated state $s$ is to be initialized with $Q(s,a) = 0$, $N(s,a) = 0$ and $P(s,a) = p_a$. From this, I understand that every edge leaving the root node (state $s_0$) starts with zero visits, zero value, and a prior given by the policy that the prediction network outputs for $s_0$.

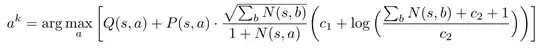
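For concreteness, this is roughly how I read that initialization. It is only a minimal sketch: the names `Edge`, `Node` and `expand` are my own, not from the paper, and the policy vector is a made-up example.

```python
from dataclasses import dataclass, field


@dataclass
class Edge:
    prior: float          # P(s, a) = p_a, from the prediction network
    visit_count: int = 0  # N(s, a), initialized to 0
    value: float = 0.0    # Q(s, a), initialized to 0


@dataclass
class Node:
    edges: dict = field(default_factory=dict)

    def expand(self, policy):
        """Create one edge per action from the policy vector p."""
        for action, p_a in enumerate(policy):
            self.edges[action] = Edge(prior=p_a)


root = Node()
root.expand([0.7, 0.2, 0.1])  # hypothetical policy output for s_0
```

After `expand`, every edge of the root has `visit_count == 0` and `value == 0.0`; only `prior` differs between actions.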

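So far so good. Then they explain how actions are selected, according to the equation (also on page 12):

$$a^k = \arg\max_a \left[ Q(s,a) + P(s,a) \cdot \frac{\sqrt{\sum_b N(s,b)}}{1 + N(s,a)} \left( c_1 + \log\left( \frac{\sum_b N(s,b) + c_2 + 1}{c_2} \right) \right) \right]$$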

But for the very first action (from the root node), every argument to the argmax is zero: $Q(s_0,a) = 0$ and $\sum_b N(s_0,b) = 0$, so even though $P(s_0,a)$ is not zero, it is multiplied by a weight of zero (the factor $\sqrt{\sum_b N(s_0,b)}$ vanishes).
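To make the problem concrete, here is a minimal NumPy sketch of the selection rule as I understand it. The function name `selection_scores` and the example prior are my own. The constants $c_1 = 1.25$ and $c_2 = 19652$ are the values quoted in the paper.

```python
import numpy as np

C1 = 1.25     # c_1 as quoted in the paper
C2 = 19652.0  # c_2 as quoted in the paper


def selection_scores(q, n, p, c1=C1, c2=C2):
    """Per-action score that the argmax is taken over."""
    q = np.asarray(q, dtype=float)
    n = np.asarray(n, dtype=float)
    p = np.asarray(p, dtype=float)
    total = n.sum()  # sum_b N(s, b)
    weight = np.sqrt(total) / (1.0 + n) * (c1 + np.log((total + c2 + 1.0) / c2))
    return q + p * weight


prior = np.array([0.7, 0.2, 0.1])  # hypothetical P(s_0, a)
root_scores = selection_scores(np.zeros(3), np.zeros(3), prior)
print(root_scores)             # [0. 0. 0.]
print(np.argmax(root_scores))  # 0
```

All scores are exactly zero. `np.argmax` returns index 0 here only because it resolves ties by taking the first occurrence, so the choice does not depend on the prior at all.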

Surely there is a mistake somewhere? Or is it that the very first action is uniformly random?