I've been trying to find the optimal number of epochs for training a neural network I just implemented.

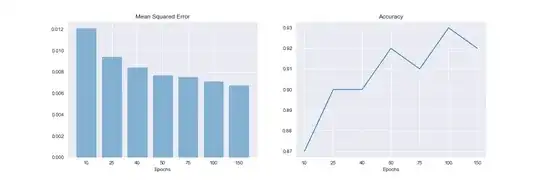

The visualizations above show the network's accuracy when it is trained for different numbers of epochs. The accuracy clearly increases with the number of epochs. However, at 75 epochs there is a dip before the accuracy continues to rise. What causes this?
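For context, the experiment is roughly a sweep like the minimal sketch below. It is hypothetical: a single sigmoid neuron trained with SGD on synthetic 2-D data stands in for my actual network, and `make_data`, `train_and_evaluate` and `sweep_epochs` are illustrative names. It assumes each epoch count is a separate training run. Because every run uses the same `seed`, the data, initialization and shuffle order are identical, so a run of E epochs follows exactly the same path as the first E epochs of any longer run.

```python
import math
import random


def make_data(n, rng):
    """Hypothetical stand-in dataset: label is 1 when x1 + x2 > 0."""
    data = []
    for _ in range(n):
        x1, x2 = rng.uniform(-1, 1), rng.uniform(-1, 1)
        data.append(((x1, x2), 1 if x1 + x2 > 0 else 0))
    return data


def sigmoid(z):
    # Numerically stable logistic function (avoids overflow in math.exp).
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def train_and_evaluate(epochs, seed=0, lr=0.1):
    """Train one sigmoid neuron with SGD for `epochs` passes; return test accuracy."""
    rng = random.Random(seed)  # a single RNG drives data, init and shuffling
    train = make_data(200, rng)
    test = make_data(100, rng)
    w = [rng.gauss(0, 0.1), rng.gauss(0, 0.1)]
    b = 0.0
    for _ in range(epochs):
        rng.shuffle(train)  # new sample order every epoch
        for (x1, x2), y in train:
            p = sigmoid(w[0] * x1 + w[1] * x2 + b)
            grad = p - y  # d(cross-entropy)/d(pre-activation)
            w[0] -= lr * grad * x1
            w[1] -= lr * grad * x2
            b -= lr * grad
    correct = sum(
        (sigmoid(w[0] * x1 + w[1] * x2 + b) > 0.5) == bool(y)
        for (x1, x2), y in test
    )
    return correct / len(test)


def sweep_epochs(epoch_counts, seed=0):
    """One independent training run per epoch count; maps epochs -> accuracy."""
    return {e: train_and_evaluate(e, seed=seed) for e in epoch_counts}


if __name__ == "__main__":
    for epochs, acc in sweep_epochs([10, 25, 50, 75, 100]).items():
        print(f"{epochs:>4} epochs: accuracy {acc:.3f}")
```

If instead each run used a different seed (or none), part of the variation between epoch counts would come from different initializations and shuffle orders rather than from the number of epochs alone.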