Variational autoencoders (VAEs) have two components in their loss function. The first is the reconstruction loss, which for image data is the pixel-wise difference between the input image and the output image. The second is the Kullback–Leibler (KL) divergence, which is introduced to make the image encodings in the latent space smoother. Here is the loss function:

\begin{align} \text{loss} &= \|x-\hat{x}\|^{2}+\operatorname{KL}\left[N\left(\mu_{x}, \sigma_{x}\right), N(0,1)\right] \\ &= \|x-d(z)\|^{2}+\operatorname{KL}\left[N\left(\mu_{x}, \sigma_{x}\right), N(0,1)\right] \end{align}
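
For concreteness, here is a minimal sketch of the two terms in plain Python. It is illustrative only and not the training code used for these experiments. It assumes a diagonal Gaussian encoder that outputs a mean and a log-variance per latent dimension, and a sum-of-squares reconstruction term; the function names are hypothetical.

```python
import math

def reconstruction_loss(x, x_hat):
    """Sum of squared pixel differences, ||x - x_hat||^2."""
    return sum((a - b) ** 2 for a, b in zip(x, x_hat))

def kl_to_standard_normal(mu, log_var):
    """Closed-form KL[N(mu, sigma^2) || N(0, 1)] for a diagonal Gaussian.

    Assumes log_var = log(sigma^2); the result is summed over latent dimensions.
    """
    return 0.5 * sum(m * m + math.exp(lv) - 1.0 - lv
                     for m, lv in zip(mu, log_var))

def vae_loss(x, x_hat, mu, log_var):
    """Unscaled VAE loss: reconstruction term plus KL term."""
    return reconstruction_loss(x, x_hat) + kl_to_standard_normal(mu, log_var)
```

The KL term is zero exactly when every latent mean is 0 and every variance is 1, which is the sense in which it pulls the encodings towards the standard normal prior.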

I am running some experiments on a dataset of famous artworks using Variational Autoencoders. My question concerns scaling the two components of the loss function in order to manipulate the training procedure to achieve better results.

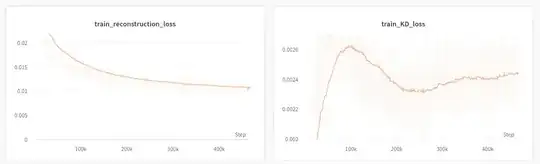

I present two scenarios. The first scenario does not scale the loss components.

Here you can see the two components of the loss function. Note that the Kullback–Leibler divergence is of a significantly smaller order of magnitude than the reconstruction loss, and that my 'famous' paintings have become unrecognisable. The image shows the reconstructions of the input data.

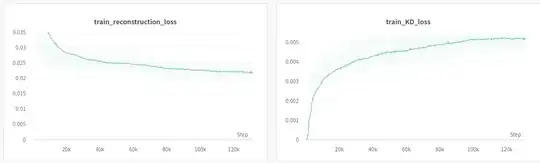

In the second scenario I have scaled the KL term by a factor of 0.1. The reconstructions now look much better.
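
The scaling in the second scenario can be sketched as a single weight on the KL term. This is a hedged illustration rather than the actual training code: `kl_weight` is a hypothetical parameter name, and the encoder is assumed to output a mean and a log-variance per latent dimension.

```python
import math

def weighted_vae_loss(x, x_hat, mu, log_var, kl_weight=1.0):
    """Return (total, reconstruction, kl) with the KL term scaled by kl_weight.

    kl_weight=1.0 corresponds to the unscaled first scenario,
    kl_weight=0.1 to the second scenario.
    """
    # Sum of squared pixel differences, ||x - x_hat||^2
    recon = sum((a - b) ** 2 for a, b in zip(x, x_hat))
    # Closed-form KL[N(mu, sigma^2) || N(0, 1)], with log_var = log(sigma^2)
    kl = 0.5 * sum(m * m + math.exp(lv) - 1.0 - lv
                   for m, lv in zip(mu, log_var))
    return recon + kl_weight * kl, recon, kl

# Toy values, chosen only for illustration
x, x_hat = [0.5, 0.5], [0.5, 0.0]
mu, log_var = [2.0], [0.0]
total_unscaled, recon, kl = weighted_vae_loss(x, x_hat, mu, log_var, kl_weight=1.0)
total_scaled, _, _ = weighted_vae_loss(x, x_hat, mu, log_var, kl_weight=0.1)
```

With any non-zero weight the KL term still contributes to the total; only its relative share changes.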

Question

Is it mathematically sound to train the network by scaling the components of the loss function? Or am I effectively excluding the KL term in the optimisation?

How should I understand this in terms of gradient descent?

Is it fair to say that we are telling the model "we care more about the image reconstructions than 'smoothing' the latent space"?
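
One way to frame the gradient-descent question is that differentiation is linear, so the gradient of a weighted sum is the weighted sum of the gradients. The toy check below illustrates this numerically with finite differences. It is only a sketch: `recon_term` is a hypothetical quadratic stand-in for the reconstruction error as a function of a single latent mean, not a real decoder.

```python
def kl_term(mu):
    # KL[N(mu, 1) || N(0, 1)] for one latent dimension with unit variance
    return 0.5 * mu * mu

def recon_term(mu, target=3.0):
    # Hypothetical stand-in for the reconstruction error as a function of mu
    return (mu - target) ** 2

def grad(f, v, eps=1e-6):
    # Central finite-difference approximation of df/dv
    return (f(v + eps) - f(v - eps)) / (2 * eps)

def total_grad(mu, kl_weight):
    # Gradient of the weighted loss recon_term + kl_weight * kl_term
    return grad(lambda m: recon_term(m) + kl_weight * kl_term(m), mu)

mu = 1.0
g_recon = grad(recon_term, mu)   # analytically 2 * (mu - 3) = -4
g_kl = grad(kl_term, mu)         # analytically mu = 1
g_scaled = total_grad(mu, kl_weight=0.1)
```

In this toy setting `g_scaled` matches `g_recon + 0.1 * g_kl`, so the KL gradient is shrunk by the weight rather than removed.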

I am confident that my network design (convolutional layers, latent vector size) has the capacity to learn parameters that produce proper reconstructions, as a convolutional autoencoder with the same parameters is able to reconstruct the images perfectly.

Here is a similar question.

Image Reference: https://towardsdatascience.com/understanding-variational-autoencoders-vaes-f70510919f73