I recently came across the featuretools package, which facilitates automated feature engineering. Here's an explanation of the package:

https://towardsdatascience.com/automated-feature-engineering-in-python-99baf11cc219

Automated feature engineering aims to help the data scientist by automatically creating many candidate features out of a dataset from which the best can be selected and used for training.

I only have limited experience with ML/AI techniques, but general AI is something that I'd been thinking about for a while before exploring existing ML techniques. One idea that kept popping up was the idea of analyzing not just raw data for patterns but derivatives of data, not unlike what featuretools can do. Here's an example:

It's not especially difficult to see the figure below as two squares, one that is entirely green and one with a blue/green horizontal gradient. This is true despite the fact that the gradient square is not any one color and its edge is the same color as the green square (i.e., there is no hard boundary).

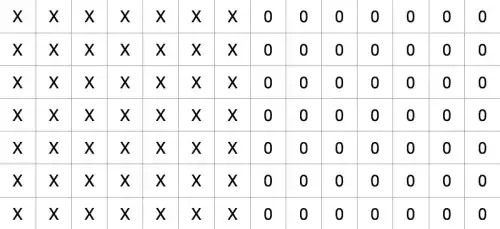

However, let's say that we calculate the difference between each pixel and the pixel to its immediate left. Ignoring for a moment that RGB is 3 separate values, let's call the difference between adjacent pixel columns in the gradient square X. The original figure is then transformed into essentially two homogeneous blocks of values: 0 throughout the solid green square (neighboring pixels are identical) and X throughout the gradient square. We could take it one step further and apply a similar left-to-right transformation again. The result is then 0 everywhere except where the first output jumps from 0 to X, which marks a hard boundary between the two squares.
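The two left-to-right passes described above can be sketched in a few lines of plain Python. This is only an illustration under assumptions that are not part of the original figure: a single channel instead of RGB, a made-up base value of 100, a made-up step X = 5, and a hypothetical helper named `row_diff`. Since every row of the figure is identical, one row is enough.

```python
def row_diff(row):
    """Difference between each value and the value to its immediate left."""
    return [right - left for left, right in zip(row, row[1:])]

# Assumed stand-in for one row of the figure (values are illustrative only).
X = 5
solid = [100] * 8                            # the uniformly colored square
gradient = [100 + X * i for i in range(8)]   # starts at the same value, rises by X
row = solid + gradient

first = row_diff(row)      # first pass: two homogeneous blocks
second = row_diff(first)   # second pass: a single spike at the boundary
boundary = [i for i, v in enumerate(second) if v != 0]

print(first)     # [0, 0, 0, 0, 0, 0, 0, 0, 5, 5, 5, 5, 5, 5, 5]
print(second)    # [0, 0, 0, 0, 0, 0, 0, 5, 0, 0, 0, 0, 0, 0]
print(boundary)  # [7]
```

Note that the raw row has no jump at the seam (both sides are 100 there), yet the second pass isolates it as the only nonzero entry.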

Once a transformation is performed, there should be some way to assess the significance of the transformation output. This is a simple and clean example where there are two blocks of homogeneous values (i.e., the output is clearly not random). If it's true that our minds use any kind of similar transformation process, the number of transformations that we perform would likely be practically countless, even in brief instances of perception.
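One possible way to put a number on "significance" is to ask how concentrated the output values are. The sketch below uses Shannon entropy of the value counts as that score. This is my own assumption about what a significance measure could look like, not an established answer to the question, and the names `value_entropy` and `row_diff` as well as the pixel values are hypothetical.

```python
import math
import random
from collections import Counter

def row_diff(row):
    """Difference between each value and the value to its immediate left."""
    return [right - left for left, right in zip(row, row[1:])]

def value_entropy(values):
    """Shannon entropy (in bits) of the empirical distribution of values."""
    counts = Counter(values)
    n = len(values)
    return -sum(c / n * math.log2(c / n) for c in counts.values())

# Assumed structured row: a solid block followed by a gradient with step 5.
structured = [100] * 8 + [100 + 5 * i for i in range(8)]

# Random row of the same length, for comparison.
rng = random.Random(0)
noise = [rng.randrange(256) for _ in range(len(structured))]

print("structured, raw:        ", value_entropy(structured))
print("structured, transformed:", value_entropy(row_diff(structured)))
print("noise, transformed:     ", value_entropy(row_diff(noise)))
```

For the structured row, the transformation lowers the entropy because the output collapses to just two distinct values, while the same transformation applied to noise leaves the values spread out. A lower score after a transformation would then be a hint that the transformation found some order.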

Ultimately, this transformation process could facilitate finding the existence of order in data. Within this framework, perhaps "intelligence" could be defined simply as the ability to detect order, which could require applying many transformations in a row, a wide variety of types of transformations, an ability to apply transformations with a high probability of finding something significant, an ability to assess significance, etc.

Just curious if anyone has thoughts on this, if there are similar ideas out there beyond simple automated feature engineering, etc.