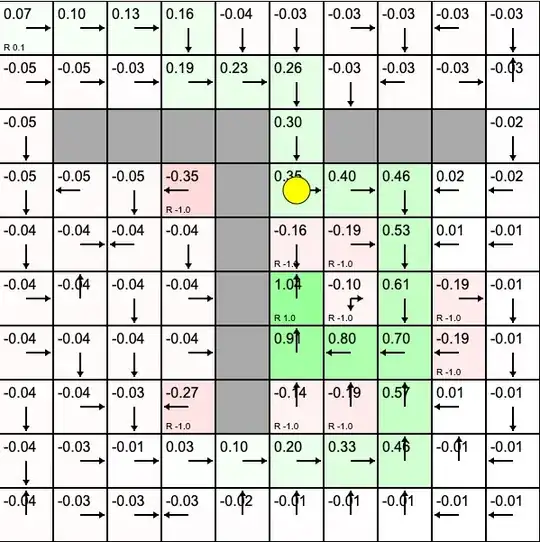

I am trying to implement the basic RL algorithms to learn on this 10x10 GridWorld (from REINFORCEJS by Kaparthy).

Currently I am stuck at TD(0). No matter how many episodes I run, when I am updating the policy after all episodes are done according to the value function I dont get the optimal value function which I obtain when toggle td learning on the grid from the link I provided above.

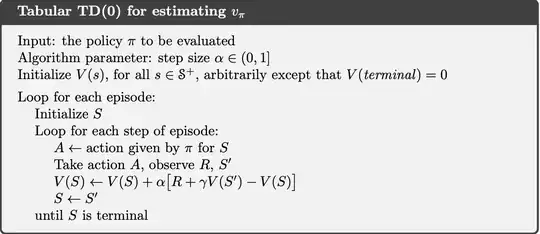

The only way I am getting the optimal policy is when I am updating the policy in each iteration and then following the updated policy when calculating the TD target. But according to the algorithm from Sutton and Barto a given policy (which is fixed over all episodes - see line 1 below) should be evaluated.

Using alpha=0.1 and gamma=0.9 after 1000 episodes my Td(0) algo finds the following value function

[[-0.04 -0.03 -0.03 -0.05 -0.08 -0.11 -0.09 -0.06 -0.05 -0.07]

[-0.08 -0.04 -0.04 -0.06 -0.1 -0.23 -0.11 -0.06 -0.07 -0.11]

[-0.13 -inf -inf -inf -inf -0.58 -inf -inf -inf -0.25]

[-0.24 -0.52 -1.23 -2.6 -inf -1.4 -1.28 -1.12 -0.95 -0.62]

[-0.28 -0.49 -0.87 -1.28 -inf -2.14 -2.63 -1.65 -1.38 -1.04]

[-0.27 -0.42 -0.64 -0.94 -inf 0.97 -1.67 -2.01 -2.79 -1.62]

[-0.26 -0.36 -0.69 -0.93 -inf -1.17 -1.72 -1.92 -2.75 -1.82]

[-0.25 -0.38 -0.67 -2.27 -inf -2.62 -2.74 -1.55 -1.31 -1.14]

[-0.23 -0.31 -0.66 -1.2 -0.98 -1.24 -1.48 -1.02 -0.7 -0.7 ]

[-0.2 -0.29 -0.43 -0.62 -0.64 -0.77 -0.87 -0.67 -0.54 -0.48]]

where -infare walls in the grid. If I update the policy according to that value function I am getting.

[['e ' 'e ' 'w ' 'w ' 'w ' 'w ' 'e ' 'e ' 's ' 'w ']

['e ' 'n ' 'n ' 'w ' 'w ' 'e ' 'e ' 'e ' 'n ' 'w ']

['n ' 'XXXX' 'XXXX' 'XXXX' 'XXXX' 'n ' 'XXXX' 'XXXX' 'XXXX' 'n ']

['n ' 'w ' 'w ' 's ' 'XXXX' 'n ' 'e ' 'e ' 'e ' 'n ']

['n ' 'w ' 'w ' 'w ' 'XXXX' 's ' 'n ' 'n ' 'n ' 'n ']

['s ' 'w ' 'w ' 'w ' 'XXXX' 's ' 'w ' 'n ' 'n ' 'n ']

['s ' 'w ' 'w ' 'w ' 'XXXX' 'n ' 'w ' 's ' 's ' 's ']

['s ' 'w ' 'w ' 'w ' 'XXXX' 'n ' 'e ' 's ' 's ' 's ']

['s ' 'w ' 'w ' 's ' 's ' 's ' 's ' 'e ' 's ' 's ']

['n ' 'w ' 'w ' 'w ' 'w ' 'w ' 'e ' 'e ' 'e ' 'w ']]

where (n, w, s, e) = (north, west, south, east). According to the result from Andrey Kaparthys simulation (from here) the final policy should look like this

Notes:

- I did not use any exploration

- when the agent ends up in the final state

[5, 5]I used the value of the starting state[0, 0]as the value of its successor stateV(S_{t+1}). The episode is then finished and the agent starts again in the starting state. - In every state the agent takes a random action taken from north, west, south or east. If he ends in a wall the value of the next state is just the value where the agent currently is in. And it stays in its state and takes a random action again.

I am scratching my head on this for a while now but I dont understand what I am missing.

- The value function has to converge. Meaning my policy should be the same as on the website (picture 2)?

- Only the value of my final state is positive while on the website simulation the whole optimal trajectory has positive values. I know that this is because on the website they update the policy in every step. But shouldn't it also work without updating it iteratively like I did it?

- Since I am taking a random action (from

n,w,s,e) in every step in every episode for example state[6, 5]or[6, 6](the one below the terminal state) can not really take advantage of the positivity of the terminal state since they are surrounded by more negative-reward-states than this positive-reward-state. This is why after so many iterations the values are getting negative.

I appreciate any help. Thanks in advance.