I'm using tf.Keras to build a deep, fully connected autoencoder. My input dataset is a dataframe with 19,947 features per sample (input shape (19947,)), and the purpose of the autoencoder is to predict normalized gene expression values. These are continuous values that range from 0 to roughly 620,000.

I've tried different architectures, and I'm using ReLU activation for all layers. For optimization I'm using Adam with MAE loss.

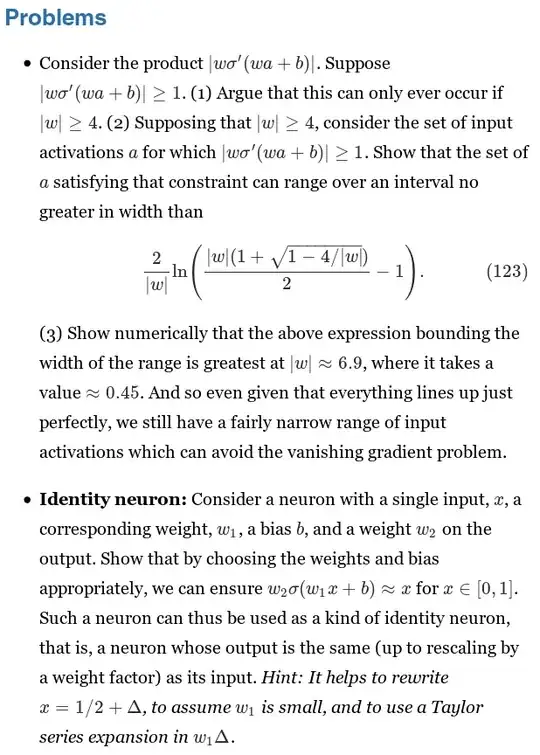

The problem is that the network trains successfully (although the training loss is still terrible), but when predicting I notice that, although the predictions make sense for some nodes, a certain number of nodes always output only 0. I've tried changing the number of nodes in my bottleneck layer (the encoder output), and it still happens, even when I decrease the number of nodes.

Any ideas on what I'm doing wrong?

tf.Keras code:

    from tensorflow import keras

    input_layer = keras.Input(shape=(19947,))

    # Encoder: 19947 -> 512 -> 128 -> 16 (bottleneck)
    simple_encoder = keras.models.Sequential([
        input_layer,
        keras.layers.Dense(512, activation='relu'),
        keras.layers.Dense(128, activation='relu'),
        keras.layers.Dense(16, activation='relu')
    ])

    # Decoder: 16 -> 128 -> 512 -> 19947
    simple_decoder = keras.models.Sequential([
        keras.layers.Dense(128, activation='relu'),
        keras.layers.Dense(512, activation='relu'),
        keras.layers.Dense(19947, activation='relu')
    ])

    simple_ae = keras.models.Sequential([simple_encoder, simple_decoder])

    simple_ae.compile(optimizer='adam', loss='mae')

    simple_ae.fit(X_train, X_train,
                  epochs=1000,
                  validation_data=(X_valid, X_valid),
                  callbacks=[early_stopping])

Output of encoder.predict with 16 nodes in the bottleneck layer: 7 nodes output only 0s, while 8 nodes predict "correctly".
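To put a number on this, here is a minimal sketch of how the always-zero nodes could be counted. It assumes NumPy and uses a small made-up array in place of the real encoder output; `dead_unit_mask` is a hypothetical helper name of my own, not part of Keras.

```python
import numpy as np


def dead_unit_mask(codes):
    """Return a boolean mask marking units that output 0 for every sample.

    `codes` is expected to have shape (n_samples, n_units), e.g. the array
    returned by calling predict on the encoder.
    """
    codes = np.asarray(codes)
    return np.all(codes == 0, axis=0)


# Made-up stand-in for the encoder output: 3 samples, 3 bottleneck units.
codes = np.array([
    [0.0, 1.2, 0.0],
    [0.0, 0.0, 3.4],
    [0.0, 2.1, 0.0],
])

mask = dead_unit_mask(codes)
print("always-zero units:", np.flatnonzero(mask).tolist())
print("count:", int(mask.sum()))
```

With the real model, `codes` would be replaced by the encoder's predictions on the validation data; a unit is only flagged if it is exactly 0 for every sample, so units that are merely zero for some inputs are not counted.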