In the paper On the Variance of the Adaptive Learning Rate and Beyond, in section 2, the authors write

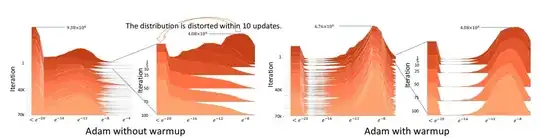

To further analyze this phenomenon, we visualize the histogram of the absolute value of gradients on a log scale in Figure 2. We observe that, without applying warmup, the gradient distribution is distorted to have a mass center in relatively small values within 10 updates. Such gradient distortion means that the vanilla Adam is trapped in bad/suspicious local optima after the first few updates.

Here is figure 2 from the paper.

Can someone explain this part?

Such gradient distortion means that the vanilla Adam is trapped in bad/suspicious local optima after the first few updates.

Why is this true?