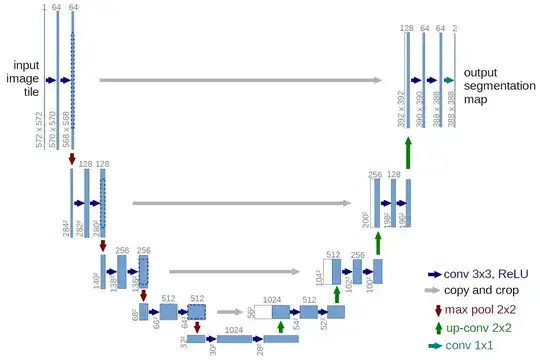

I was going through the U-Net paper. U-Net consists of a contracting path followed by an expansive path, and both paths use regular convolutional layers. I understand the use of convolutional layers in the contracting path, but I can't figure out what they are for in the expansive path. Note that I'm not asking about the transposed convolutions, but about the regular convolutions in the expansive path.


1 Answer


The point is that in the expansive path you have two forms of information:

- the information passed up from the end of the contracting path, which carries the high-level features extracted from the original image.

- the information from the skip connections, which copy a cropped version of the feature maps in the contracting path. As we move forward through the expansive path, these copies come from progressively earlier stages of the contracting path, so they are progressively richer in detail.

Intuitively, you can think of it like this: high-level features help the network tell which areas to group together, while details help it tell where each group starts and ends at the pixel level.

The idea is to combine these two forms of information, i.e. the high-level features and the details, as well as possible. To do this you need trainable layers that learn how to combine them. This is where the convolutional layers come into play.
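To make the combination step concrete, here is a minimal NumPy sketch of one stage of the expansive path: crop the skip feature map, concatenate it with the upsampled features along the channel axis, then apply an unpadded 3x3 convolution followed by ReLU. The channel counts, spatial sizes, random weights and the helper names `center_crop` and `conv3x3_valid` are illustrative assumptions, not taken from the paper or from any library.

```python
import numpy as np

def center_crop(x, target_h, target_w):
    """Crop a (C, H, W) feature map around its centre to (C, target_h, target_w)."""
    _, h, w = x.shape
    top = (h - target_h) // 2
    left = (w - target_w) // 2
    return x[:, top:top + target_h, left:left + target_w]

def conv3x3_valid(x, weight, bias):
    """Unpadded 3x3 convolution (cross-correlation, as deep learning libraries compute it).

    x: (C_in, H, W), weight: (C_out, C_in, 3, 3), bias: (C_out,)
    returns: (C_out, H - 2, W - 2)
    """
    _, h, w = x.shape
    c_out = weight.shape[0]
    out = np.zeros((c_out, h - 2, w - 2))
    for i in range(h - 2):
        for j in range(w - 2):
            patch = x[:, i:i + 3, j:j + 3]  # (C_in, 3, 3)
            # Every output channel sums over ALL input channels and the 3x3 window.
            out[:, i, j] = np.tensordot(weight, patch, axes=([1, 2, 3], [0, 1, 2])) + bias
    return out

rng = np.random.default_rng(0)

# Toy sizes (assumed): 4 channels of coarse, high-level features after the up-convolution,
# and 4 channels of finer features copied from the contracting path.
upsampled = rng.standard_normal((4, 8, 8))
skip = rng.standard_normal((4, 12, 12))

# The skip map is larger because unpadded convolutions shrink the maps, so crop it first.
skip_cropped = center_crop(skip, 8, 8)

# Concatenation only stacks the two sources side by side; nothing is combined yet.
merged = np.concatenate([skip_cropped, upsampled], axis=0)

# The trainable convolution is what actually mixes details with high-level features.
weight = rng.standard_normal((4, 8, 3, 3)) * 0.1  # random stand-in for learned weights
bias = np.zeros(4)
fused = np.maximum(conv3x3_valid(merged, weight, bias), 0.0)  # ReLU

print(merged.shape, fused.shape)  # (8, 8, 8) (4, 6, 6)
```

Without the convolution, the concatenated tensor would just hold two unrelated groups of channels; the learned kernel spans all 8 input channels, so each output value depends on both sources.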

Djib2011


- It may be worth mentioning that in the expansive path we do both convolution and transpose convolution (and that's one case where people may get confused). – nbro Jan 06 '21 at 12:07

- I know that convolutional layers extract features in the form of a feature map. The feature map stores the spatial location of different features (like edge, circle, etc.). Do convolution layers also combine the features? – Bhuwan Bhatt Jan 06 '21 at 16:31

- @BhuwanBhatt they combine the feature maps, which are in the *channels* dimensions. – Djib2011 Jan 06 '21 at 18:49
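
As a small illustration of the point about the channel dimension, the sketch below uses a 1x1 convolution, the simplest case, to show that each output feature map is a weighted sum of all input feature maps. The shapes and random weights are assumptions chosen for the example; a 3x3 kernel does the same mixing while also summing over a spatial window.

```python
import numpy as np

rng = np.random.default_rng(0)
features = rng.standard_normal((3, 5, 5))  # 3 input feature maps (channels)
weight = rng.standard_normal((2, 3))       # 1x1 kernel: 2 output channels, 3 input channels

# A 1x1 convolution applies the same channel-mixing matrix at every pixel.
mixed = np.einsum("oc,chw->ohw", weight, features)

# Output channel 0 written out by hand: a weighted sum of the three input maps.
manual = sum(weight[0, c] * features[c] for c in range(3))

print(np.allclose(mixed[0], manual))  # True
```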