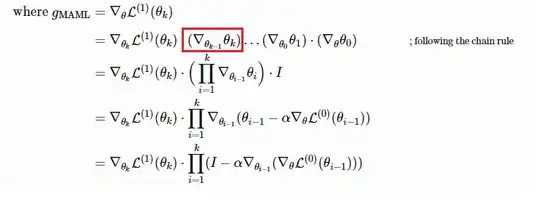

I am attempting to fully understand the explicit derivation and computation of the Hessian and how it is used in MAML. I came across this blog: https://lilianweng.github.io/lil-log/2018/11/30/meta-learning.html.

Specifically, could someone help to clarify this for me: is this term in the red box literally interpreted as the gradient at $\theta_{k-1}$ multiplied by the $\theta_k$?