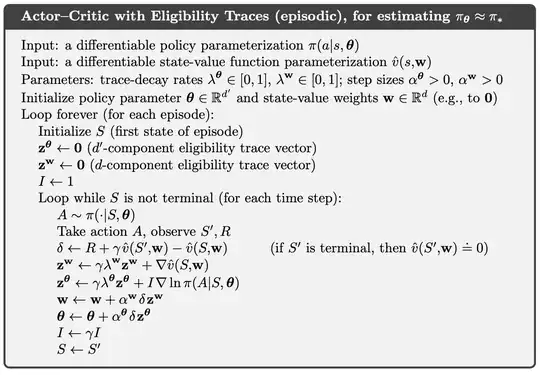

The pseudocode shown here is taken from Sutton and Barto's "Reinforcement Learning: An Introduction". It shows an actor-critic implementation with eligibility traces. My question is: if I set $\lambda^{\theta}=1$ and replace the TD error $\delta$ with the immediate reward $R_t$, do I get a backward-view implementation of REINFORCE?
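One way to probe this numerically is to compare the total parameter change over a single episode. The sketch below is not from the book: it assumes the policy parameters are held fixed during the episode (offline updates), omits the step size, uses random vectors as stand-ins for $\nabla \ln \pi(A_t|S_t, \theta)$, and indexes the reward following step $t$ as `rewards[t]`. The function names are hypothetical.

```python
import numpy as np

def backward_trace_update(grads, rewards, gamma, lam=1.0):
    """Sum of the trace-based actor updates over one episode, with the
    reward used in place of the TD error (step size omitted)."""
    z = np.zeros_like(grads[0])      # eligibility trace z^theta
    discount = 1.0                   # the factor I = gamma^t in the pseudocode
    total = np.zeros_like(grads[0])
    for g, r in zip(grads, rewards):
        z = gamma * lam * z + discount * g
        total += r * z
        discount *= gamma
    return total

def reinforce_update(grads, rewards, gamma):
    """Sum of the forward-view REINFORCE updates gamma^t * G_t * grad_t."""
    total = np.zeros_like(grads[0])
    G = 0.0
    for t in reversed(range(len(rewards))):
        G = rewards[t] + gamma * G   # discounted return from step t
        total += gamma**t * G * grads[t]
    return total

rng = np.random.default_rng(0)
grads = rng.normal(size=(6, 4))      # stand-in gradients, one row per step
rewards = rng.normal(size=6)
gamma = 0.9

same = np.allclose(backward_trace_update(grads, rewards, gamma),
                   reinforce_update(grads, rewards, gamma))
print(same)
```

Under these assumptions the two totals agree when `lam=1.0` and generally differ for smaller values. The check says nothing about the online case, where $\theta$ changes between steps and the gradients are no longer evaluated at the same parameters.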

- Well, I think that if you set $\lambda^{\theta}$ to 1, there would be no point in using eligibility traces. In that case you would update $\theta$ directly, based on the policy gradient multiplied by the TD error $\delta$. – Georgy Firsov Jan 20 '21 at 14:33

- Well, not quite. $z^{\theta}$ will still hold $\nabla \ln \pi(A|S, \theta)$ from previous steps, and will decay them based on $\gamma$. That means past actions keep receiving credit, in a decaying manner, as later updates arrive. Since eligibility traces implement a backward view of the $\lambda$-return, setting $\lambda=1$ there should result in MC updates. Is there anything wrong in this reasoning? – Javier Ventajas Hernández Jan 20 '21 at 15:04

- Now that I think about it, setting both $\lambda^{\theta}=1$ and $\lambda^w=1$ should make this method become REINFORCE with baseline, shouldn't it? – Javier Ventajas Hernández Jan 20 '21 at 15:07
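The actor half of that last conjecture can be checked in a similar way. This is a rough sketch under stated assumptions, not the book's algorithm: parameters and value estimates are held fixed during the episode, the terminal state has value 0, the step size is omitted, and the gradients and values are random stand-ins. With $\lambda^{\theta}=1$ the discounted TD errors telescope to $G_t - \hat{v}(S_t)$, which is the quantity REINFORCE with baseline uses.

```python
import numpy as np

def trace_actor_update(grads, rewards, values, gamma):
    """Sum of delta_t * z_t over one episode with lambda^theta = 1."""
    T = len(rewards)
    z = np.zeros_like(grads[0])      # eligibility trace z^theta
    discount = 1.0                   # the factor I = gamma^t
    total = np.zeros_like(grads[0])
    for t in range(T):
        v_next = values[t + 1] if t + 1 < T else 0.0  # terminal value assumed 0
        delta = rewards[t] + gamma * v_next - values[t]
        z = gamma * z + discount * grads[t]
        total += delta * z
        discount *= gamma
    return total

def reinforce_baseline_update(grads, rewards, values, gamma):
    """Sum of gamma^t * (G_t - v(S_t)) * grad_t over one episode."""
    total = np.zeros_like(grads[0])
    G = 0.0
    for t in reversed(range(len(rewards))):
        G = rewards[t] + gamma * G
        total += gamma**t * (G - values[t]) * grads[t]
    return total

rng = np.random.default_rng(1)
grads = rng.normal(size=(5, 3))      # stand-ins for grad ln pi at each step
rewards = rng.normal(size=5)
values = rng.normal(size=5)          # stand-ins for v(S_t, w)
gamma = 0.95

match = np.allclose(trace_actor_update(grads, rewards, values, gamma),
                    reinforce_baseline_update(grads, rewards, values, gamma))
print(match)
```

This only covers the actor update with fixed parameters. The critic update with $\lambda^w=1$ would need its own analogous check, and the online algorithm, which changes $\theta$ and $w$ at every step, is not expected to match exactly.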