I'm not sure if I understood your question correctly, but here's my take anyway.

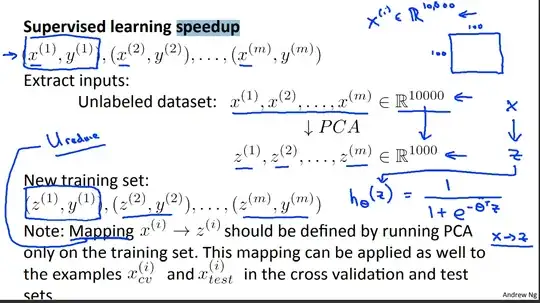

So, PCA is a technique that you can apply to data to reduce the number of features.
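
To make the "reduce the number of features" part concrete, here is a minimal sketch of PCA done by hand with NumPy's SVD. The helper name `pca_reduce` and the toy data are my own assumptions for illustration; in practice you would more likely use a library implementation.

```python
import numpy as np

def pca_reduce(X, n_components):
    """Project X (n_samples, n_features) onto its top principal components."""
    # PCA is defined on mean-centered data, so center each feature first.
    mean = X.mean(axis=0)
    X_centered = X - mean
    # Rows of Vt are the principal directions, ordered by decreasing singular value.
    _, S, Vt = np.linalg.svd(X_centered, full_matrices=False)
    components = Vt[:n_components]
    # Fraction of the total variance captured by each kept component.
    explained_ratio = (S ** 2)[:n_components] / np.sum(S ** 2)
    return X_centered @ components.T, components, explained_ratio

# Toy data (an assumption): 10 features that are noisy mixtures of 3 latent factors.
rng = np.random.default_rng(0)
latent = rng.normal(size=(200, 3))
X = latent @ rng.normal(size=(3, 10)) + 0.05 * rng.normal(size=(200, 10))

X_reduced, components, explained_ratio = pca_reduce(X, n_components=3)
print(X_reduced.shape)        # 10 features reduced to 3
print(explained_ratio.sum())  # share of variance that survives the reduction
```

The summed explained-variance ratio gives a rough idea of how much information you keep. The closer it is to 1, the less you lose by dropping the remaining components.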

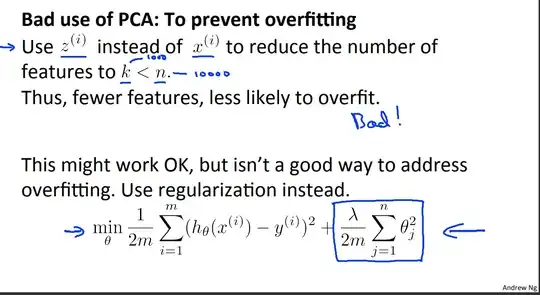

In return, (i) this can speed up training, as there are fewer features to compute with, and (ii) it can prevent overfitting, as you lose some information about your data.

To detect overfitting, you usually monitor the training and validation losses during training. If your training loss keeps decreasing but your validation loss stays constant or increases, it's likely that your model is overfitting the training data. In practice, this means your model generalizes worse, which you can observe by measuring the test accuracy.
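
As a rough sketch of that check, the function below scans recorded per-epoch losses and reports where the validation loss stopped improving while the training loss kept going down. The name `find_overfitting_start`, the `patience` parameter, and the example numbers are all assumptions made for illustration, not part of any particular library.

```python
def find_overfitting_start(train_losses, val_losses, patience=3):
    """Return the epoch with the best validation loss if it was followed by
    `patience` consecutive epochs where the validation loss did not improve
    while the training loss kept decreasing; return None otherwise."""
    best_val = float("inf")
    best_epoch = None
    stale = 0  # consecutive epochs showing the overfitting pattern
    for epoch, (train, val) in enumerate(zip(train_losses, val_losses)):
        if val < best_val:
            # Validation loss improved: remember it and reset the counter.
            best_val, best_epoch, stale = val, epoch, 0
            continue
        train_still_improving = epoch > 0 and train < train_losses[epoch - 1]
        stale = stale + 1 if train_still_improving else 0
        if stale >= patience:
            return best_epoch
    return None

# Made-up loss curves: training keeps improving, validation turns around after epoch 2.
train = [1.0, 0.8, 0.6, 0.5, 0.4, 0.3, 0.2]
val = [1.1, 0.9, 0.8, 0.82, 0.85, 0.9, 0.95]
print(find_overfitting_start(train, val))
```

This is essentially the logic behind early stopping: you would keep the model from the returned epoch rather than the last one.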

All in all, you can apply PCA, train a new model, and measure your model's test accuracy to see if PCA has successfully prevented overfitting. In case it didn't, you can re-train with other regularization techniques such as weight decay and so on.

After your edit that added the slides:

Basically, what the slides claim is that PCA could be a bad way to prevent overfitting compared to standard regularization methods. To actually see whether this is the case, the standard way would be to measure your model's performance on a validation dataset. So, if PCA throws away lots of information, and hence causes your model to underfit, your validation accuracy should be poor compared to what you get with standard regularization techniques.
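
Here is a small sketch of that comparison under some loud assumptions: the data is synthetic, the model is linear regression (so I compare validation mean squared error instead of accuracy), "standard regularization" means an L2 penalty (weight decay), and names such as `fit_ridge` are just illustrative. No intercept is fitted because the toy data is generated with zero mean.

```python
import numpy as np

def fit_ridge(X, y, weight_decay):
    """Closed-form L2-regularized least squares: (X^T X + lambda * I)^-1 X^T y."""
    n_features = X.shape[1]
    return np.linalg.solve(X.T @ X + weight_decay * np.eye(n_features), X.T @ y)

def mse(X, y, w):
    return float(np.mean((X @ w - y) ** 2))

# Synthetic data (an assumption): few samples, many features, all of them informative.
rng = np.random.default_rng(0)
n_train, n_val, n_features = 40, 200, 30
w_true = rng.normal(size=n_features)
X_train = rng.normal(size=(n_train, n_features))
X_val = rng.normal(size=(n_val, n_features))
y_train = X_train @ w_true + 0.5 * rng.normal(size=n_train)
y_val = X_val @ w_true + 0.5 * rng.normal(size=n_val)

# Option A: PCA down to k components (fitted on the training set only), no penalty.
k = 5
mean = X_train.mean(axis=0)
_, _, Vt = np.linalg.svd(X_train - mean, full_matrices=False)
P = Vt[:k].T  # (n_features, k) projection matrix
w_pca = fit_ridge((X_train - mean) @ P, y_train, weight_decay=0.0)
val_mse_pca = mse((X_val - mean) @ P, y_val, w_pca)

# Option B: keep all features and regularize with weight decay instead.
w_ridge = fit_ridge(X_train, y_train, weight_decay=1.0)
val_mse_ridge = mse(X_val, y_val, w_ridge)

print(f"validation MSE with PCA (k={k}): {val_mse_pca:.3f}")
print(f"validation MSE with weight decay: {val_mse_ridge:.3f}")
```

In this toy setup every feature carries signal, so throwing most of them away with PCA should underfit and give the worse validation error, which is the situation the slides warn about. On your own data the outcome can differ, which is exactly why you measure it on a validation set.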