I'm working my way through the Bayesian world. So far, I've understood that the MLE or the MPA are point estimates, therefore using such models just output one specific value and not a distribution.

Moreover, vanilla neuronal networks do in fact s.th. like MLE, because minimizing the squared-loss or the cross-entropy is similar to finding parameters that maximize the likelihood. Moreover, using neural networks with regularisation is comparable to the MAP estimates, as the prior works like the penalty term in error functions.

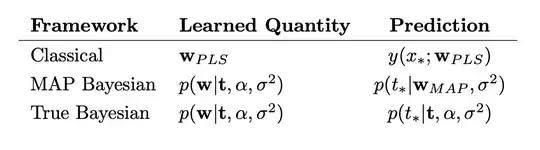

However, I've found this work. It shows that the weights $W_{PLS}$ gained from a penalized least-squared are the same as the weights $W_{MAP}$ gained through maximum a posteriori:

However, the paper says:

The first two approaches result in similar predictions, although the MAP Bayesian model does give a probability distribution for $t_*$ The mean of this distribution is the same as that of the classical predictor $y(x_*; W_{PLS})$, since $W_{PLS} = W_{MAP}$

What I don't get here is how can the MAP Bayesian give a proability distribution over $t_*$, when it is only a point estimate?

Consider a neuronal network - a point estimate would mean some fixed weights, so how can there be a output probability distribution? I thought that this is only achieved in the true Bayesian, where we integrate out the unknown weights, therefore building something like the weight averaged of all outcomes, using all possible weights.

Can you help me?