I am trying to train a model to classify 10 classes of hand gestures, but I don't understand why my validation accuracy is roughly double my training accuracy.

My dataset is from Kaggle:

https://www.kaggle.com/gti-upm/leapgestrecog/version/1

My code for training the model:

print(x.shape, y.shape)

# ((10000, 240, 320), (10000,))

# preprocessing

x_data = x/255

le = LabelEncoder()

y_data = le.fit_transform(y)

x_data = x_data.reshape(-1,240,320,1)

x_train,x_test,y_train,y_test = train_test_split(x_data,y_data,test_size=0.25,shuffle=True)

y_train = to_categorical(y_train)

y_test = to_categorical(y_test)

# Training

base_model = keras.applications.InceptionV3(input_tensor=Input(shape=(240,320,3)),
                                            include_top=False,
                                            weights='imagenet')

base_model.trainable = False

CLASSES = 10

input_tensor = Input(shape=(240,320,1) )

model = Sequential()

model.add(input_tensor)

model.add(Conv2D(3,(3,3),padding='same'))

model.add(base_model)

model.add(GlobalAveragePooling2D())

model.add(Dropout(0.4))

model.add(Dense(CLASSES, activation='softmax'))

# `learning_rate` replaces the deprecated `lr` argument in recent Keras versions
model.compile(loss='categorical_crossentropy',
              optimizer=optimizers.Adam(learning_rate=1e-5), metrics=['accuracy'])

history = model.fit(
    x_train,
    y_train,
    batch_size=64,
    epochs=20,
    validation_data=(x_test, y_test)
)

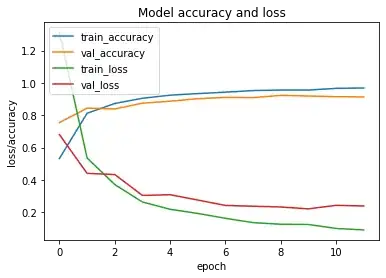

The training log looks like this:

Epoch 1/20

118/118 [==============================] - 117s 620ms/step - loss: 2.4571 - accuracy: 0.1020 - val_loss: 2.2566 - val_accuracy: 0.1640

Epoch 2/20

118/118 [==============================] - 70s 589ms/step - loss: 2.3253 - accuracy: 0.1324 - val_loss: 2.1569 - val_accuracy: 0.2512
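As a sanity check on the log above, here is a minimal sketch in plain Python of what chance level would look like for this setup. It assumes the 10 classes are balanced, and the helper names `chance_baseline` and `steps_per_epoch` are only for illustration; they are not part of Keras or scikit-learn.

```python
import math


def chance_baseline(num_classes):
    """Accuracy and cross-entropy loss of a uniform guess.

    Assumption: classes are balanced, so guessing uniformly is right
    1/num_classes of the time.
    """
    accuracy = 1.0 / num_classes
    loss = math.log(num_classes)  # equals -log(1 / num_classes)
    return accuracy, loss


def steps_per_epoch(num_samples, test_size, batch_size):
    """Number of batches per epoch after holding out a test fraction."""
    # The held-out count is rounded up; the rest is used for training.
    num_train = num_samples - math.ceil(num_samples * test_size)
    return math.ceil(num_train / batch_size)


acc, loss = chance_baseline(10)
print(f"chance accuracy: {acc:.4f}, chance loss: {loss:.4f}")
# prints: chance accuracy: 0.1000, chance loss: 2.3026

print("steps per epoch:", steps_per_epoch(10000, 0.25, 64))
# prints: steps per epoch: 118
```

The step count matches the `118/118` in the log, so the split sizes are as expected. The training accuracy of 0.1020 and loss of 2.4571 in epoch 1 are at or slightly worse than this chance baseline, while the validation figures are already somewhat better than it.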

I have tried removing the Dropout layer and changing the train_test_split parameters, but neither makes a difference.

EDIT:

After switching to a dataset of color images from https://www.kaggle.com/vbookshelf/v2-plant-seedlings-dataset , I still get higher validation accuracy than training accuracy in the initial epochs. Is this acceptable, or am I doing something wrong?