I am reading this paper on image retrieval where the goal is to train a network that produces highly discriminative descriptors (aka embeddings) for input images. If you are familiar with facial recognition architectures, it is similar in that the network is trained with matching / non-matching pairs and triplet loss.

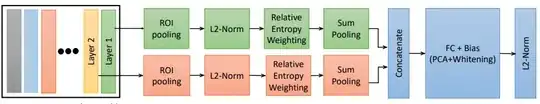

The paper discusses the use of PCA and whitening on the training set of descriptors as a means of further improving the discriminability (second to last block in image below, fig 1a of paper). This all make sense to me.

Where I'm confused is where they replace PCA/whitening with a trainable fully connected layer with bias. I do understand that PCA+whitening is just the composition of of two linear transformations (ie rotation + (un)squishing in each dimension) and that these are the same as having one linear transformation but:

- How is PCA+whitening equivalent to a learnable fully connected layer? Is there some theorem or paper explaining that training a fully connected layer with triplet loss is somehow statistically equivalent to PCA and whitening?

- Why is there a bias?