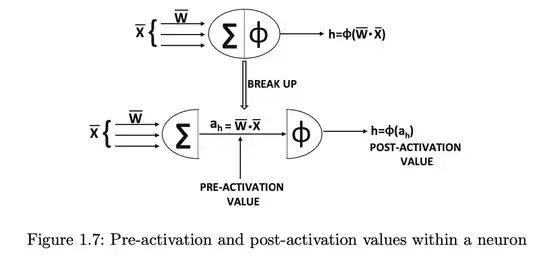

I am currently studying the textbook *Neural Networks and Deep Learning* by Charu C. Aggarwal. Section 1.2.1.3, "Choice of Activation and Loss Functions", presents the following figure:

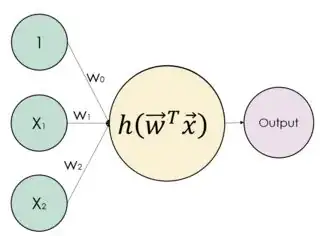

Here $\overline{X}$ denotes the features, $\overline{W}$ the weights, and $\phi$ the activation function.

So this is a perceptron (which is a form of artificial neuron).
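To make my reading of the figure concrete, here is a minimal sketch of what I understand the neuron to compute: the dot product $\overline{W} \cdot \overline{X}$ passed through $\phi$. The function names and the choice of the sign function for $\phi$ are my own assumptions for illustration, not taken from the book.

```python
def sign(value):
    # Assumed activation for this sketch: maps the pre-activation to +1 or -1.
    return 1 if value >= 0 else -1


def perceptron_predict(weights, features, activation=sign):
    # Pre-activation value: the dot product of the weights and the features.
    pre_activation = sum(w * x for w, x in zip(weights, features))
    # Post-activation value: the activation function applied to the dot product.
    return activation(pre_activation)


# Hypothetical weights and features, chosen only to show one forward pass.
prediction = perceptron_predict([0.5, -1.0, 2.0], [1.0, 2.0, 0.5])
print(prediction)
```

Nothing in this computation refers to a loss, which is the source of my confusion.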

But where does the "loss", or "loss function", fit into this picture? I have been unable to reconcile it with the figure.

EDIT

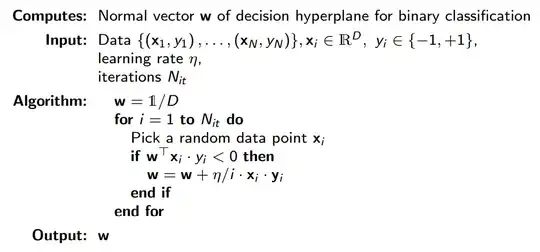

The way the loss function was introduced in the textbook seemed to imply that it was part of the architecture of the perceptron / artificial neuron. According to hanugm's answer, however, it is external to the neuron and is instead used to update the neuron's weights. So it seems that I misunderstood what was written in the textbook.
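As I now understand it, the relationship could be sketched as follows: the loss is computed outside the neuron from its output score and the true label, and its gradient is used to adjust the weights. This is only my own illustration. It assumes labels in $\{-1, +1\}$ and a perceptron-criterion-style loss $\max(0, -y \, (\overline{W} \cdot \overline{X}))$; the function names, learning rate, and numbers are hypothetical.

```python
def score(weights, features):
    # The neuron itself: only the dot product of weights and features.
    return sum(w * x for w, x in zip(weights, features))


def perceptron_loss(weights, features, label):
    # External to the neuron: compares the neuron's score with the true label.
    # Assumed loss for this sketch: max(0, -y * (W . X)), with y in {-1, +1}.
    return max(0.0, -label * score(weights, features))


def train_step(weights, features, label, learning_rate=1.0):
    # The gradient of the assumed loss with respect to the weights is
    # -y * X when the example is misclassified, and zero otherwise.
    if label * score(weights, features) < 0:
        return [w + learning_rate * label * x for w, x in zip(weights, features)]
    return list(weights)


# Hypothetical training example with true label +1.
weights = [-1.0, 0.5]
features = [1.0, 1.0]
label = 1

loss_before = perceptron_loss(weights, features, label)
weights = train_step(weights, features, label)
loss_after = perceptron_loss(weights, features, label)
print(loss_before, loss_after)
```

In this sketch the neuron's architecture (`score`) never changes; the loss only determines how its weights are updated during training.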

In my question above, I assumed that the loss function was part of the architecture of the perceptron / artificial neuron, and then asked how it fit into that architecture, since I couldn't see any indication of it in the figure.

Is the loss / loss function part of the architecture of a perceptron / artificial neuron? I cannot see any indication of it in Figure 1.7, so I'm confused about this. If it is not part of the architecture, then how does the loss / loss function relate to the architecture of the perceptron / artificial neuron?