The quick answer: yes, you can. Just add images without labels, but make sure none of the negative samples contain cars, or you will drive the model crazy (i.e. convergence and instability issues).
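How you add "images without labels" depends on the training framework, which isn't specified here. As a minimal sketch, assuming a YOLO-style layout (one `.txt` label file per image, where an empty file means "no objects in this image"), a hypothetical helper `add_background_labels` could create empty label files for the background images that don't have one yet. It cannot check that those images really contain no cars; that still has to be verified by hand.

```python
from pathlib import Path

# Assumption: YOLO-style dataset, i.e. images/<name>.jpg pairs with labels/<name>.txt,
# and an empty label file marks a background (negative) image.
IMAGE_EXTS = {".jpg", ".jpeg", ".png", ".bmp"}


def add_background_labels(image_dir, label_dir):
    """Hypothetical helper: create an empty label file for every image that has none.

    Returns the list of label files that were created. Existing label files
    are never modified.
    """
    image_dir, label_dir = Path(image_dir), Path(label_dir)
    label_dir.mkdir(parents=True, exist_ok=True)
    created = []
    for img in sorted(image_dir.iterdir()):
        if img.suffix.lower() not in IMAGE_EXTS:
            continue  # skip non-image files
        label = label_dir / (img.stem + ".txt")
        if not label.exists():
            label.touch()  # empty file = "no objects here"
            created.append(label)
    return created
```

Creating explicit empty files (rather than leaving labels missing) makes it obvious which images were intentionally added as negatives.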

However, that might not be the best approach. Why? Because your dataset already has plenty of negative examples. This was pointed out by the well-known paper *Focal Loss for Dense Object Detection*. The paper essentially treats every location in a dataset image as a training signal (strictly speaking, every candidate location or anchor box; thinking of them as pixels is a fair simplification). Each image then contains lots of negative signals (nothing of interest: sky, ground, trees...) and only a few positive ones (the actual car).
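To make the imbalance concrete, here is a small sketch that counts positive vs negative pixels for a hypothetical 640x640 image containing a single 50x30 car box. It follows the pixel framing used above (the paper itself reasons about candidate locations/anchors, but the imbalance is of the same kind); the image size and box coordinates are made up for illustration.

```python
def signal_counts(width, height, boxes):
    """Count positive (inside any box) and negative pixels in one image.

    boxes: list of (x0, y0, x1, y1) in pixel coordinates, end-exclusive.
    Boxes are clipped to the image and overlaps are counted once.
    """
    positive = set()
    for x0, y0, x1, y1 in boxes:
        for y in range(max(0, y0), min(height, y1)):
            for x in range(max(0, x0), min(width, x1)):
                positive.add((x, y))
    pos = len(positive)
    return pos, width * height - pos


# Hypothetical example: one 50x30 car in a 640x640 image.
pos, neg = signal_counts(640, 640, [(100, 200, 150, 230)])
print(pos, neg, round(neg / pos))  # 1500 positive vs 408100 negative, ~272:1
```

Even a single, fairly large car leaves roughly 272 negative pixels for every positive one, so every labeled image already supplies a lot of negative signal.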

So if every image in the dataset already contains far more negative signals (pixels) than positive ones, the problem is probably not a lack of negative examples. That leaves you with two options:

- Use a loss function that focuses more on the positive signal (car pixels) than on the negative examples (non-car pixels), such as focal loss or its variants, which down-weight the many easy negatives

- Add more positive examples to the dataset
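As a rough illustration of the first option, here is a minimal pure-Python sketch of the binary focal loss from the paper, FL(p_t) = -alpha_t * (1 - p_t)^gamma * log(p_t), next to plain binary cross-entropy. The defaults gamma=2 and alpha=0.25 are the values reported in the paper; the pixel counts (1,500 car vs 408,100 background pixels) and the predicted probabilities are hypothetical. This is not a training-ready implementation; frameworks ship their own (torchvision, for example, has `torchvision.ops.sigmoid_focal_loss`).

```python
import math


def binary_cross_entropy(p, y, eps=1e-7):
    """Standard BCE for one prediction p = P(car) and label y in {0, 1}."""
    p = min(max(p, eps), 1 - eps)  # clip to avoid log(0)
    p_t = p if y == 1 else 1 - p   # probability assigned to the true class
    return -math.log(p_t)


def focal_loss(p, y, gamma=2.0, alpha=0.25, eps=1e-7):
    """Binary focal loss: -alpha_t * (1 - p_t)**gamma * log(p_t).

    gamma > 0 shrinks the loss of well-classified (easy) examples, which are
    mostly background; alpha weights positives vs negatives.
    """
    p = min(max(p, eps), 1 - eps)
    p_t = p if y == 1 else 1 - p
    alpha_t = alpha if y == 1 else 1 - alpha
    return -alpha_t * (1 - p_t) ** gamma * math.log(p_t)


def negative_share(loss_fn, n_neg=408_100, p_neg=0.01, n_pos=1_500, p_pos=0.3):
    """Fraction of the total loss coming from easy negatives (hypothetical numbers)."""
    neg = n_neg * loss_fn(p_neg, 0)  # background pixels, confidently "not car"
    pos = n_pos * loss_fn(p_pos, 1)  # car pixels, still poorly predicted
    return neg / (neg + pos)


print(f"BCE:   {negative_share(binary_cross_entropy):.1%} of the loss from easy negatives")
print(f"Focal: {negative_share(focal_loss):.1%} of the loss from easy negatives")
```

With plain BCE, the huge number of easy background pixels contributes most of the loss even though each one is already classified correctly; with focal loss, their contribution becomes negligible and the gradient is driven by the car pixels.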

I can confirm what the paper states from my day-to-day experiments. We currently have a battery of experiments in which the runs with focal loss and no negative examples perform best, compared with runs that use negative examples without focal loss.

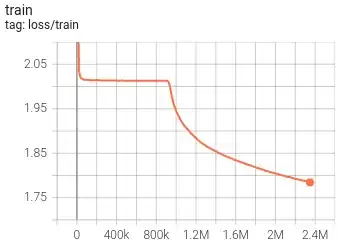

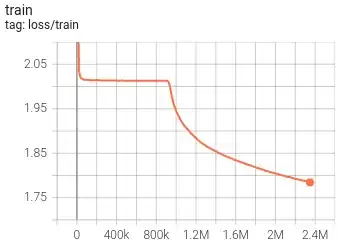

For reference, this is what happens when there are lots of negative examples:

In this experiment the model took a while (1M steps) to figure out that the negative samples were not useful. From then on it focused on the positive samples, training started to converge, and inference started to show something meaningful.