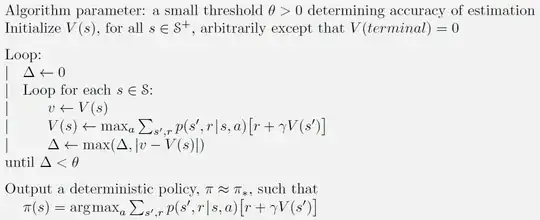

My understanding is that you want to extend the main update loop like this:

$\qquad V(s) \leftarrow \text{max}_a \sum_{r,s'} p(r,s'|s,a)[r+\gamma V(s')]$

$\qquad \pi(s) \leftarrow \text{argmax}_a \sum_{r,s'} p(r,s'|s,a)[r+\gamma V(s')]$

Where the first line is same as original, the second line is taken from the end update that calculates the optimal policy.

Once our $\Delta < \theta$ and we break out of the loop, do we have an optimal policy from the family of optimal policies? Is this a valid thing to do?

Yes.

The only problem with this approach is the extra wasted processing - you will evaluate and overwrite $\pi$ once per loop. Doing it once at the end, after convergence, is more efficient.

The policies obtained after using standard VI and the method I described above are mostly similar, yet different for some states.

There are lots of equivalent optimal policies in the gambler's problem exercise in the book. This is expected. If you re-run the normal value iteration routine you should see similar changes to the policy.

The thing the policies have in common is a kind of fractal sawtooth shape for amounts bid, with triangles trying to get funds to fixed points and spikes where half or all funds are bid in single go to reach 100 funds. if you plot bid amount versus current funds. It might be one large triangle, or broken down into multiple smaller ones with larger bid spikes separating them. That is the expected shape, unless probability of a win is greater than 0.5, in which case the optimal policy is to bet a minimal amount on each time step and rely on the law of averages to eventually reach the target almost guaranteed. So you could set it to 0.6, you should see both your scripts return this same "safe" policy.