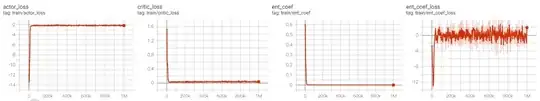

I am using the Stable-Baselines3 implementation of the Soft Actor-Critic (SAC) algorithm. The plotted training curves look promising, but I am not sure how to interpret the actor and critic losses. The entropy coefficient $\alpha$ is learned automatically during training, and as it decreases, the critic loss and the actor loss decrease as well.
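For reference, here is a minimal numpy sketch of the three losses SAC evaluates in each gradient step. It follows my reading of SB3's `SAC.train()`: twin Q-networks with clipped double-Q targets, and automatic temperature tuning through `log_ent_coef`. The function `sac_losses` and its argument names are hypothetical. It only evaluates the losses on precomputed arrays and performs no gradient updates. The point is that $\alpha$ appears both in the critic's target and in the actor loss, so a shrinking $\alpha$ changes what both losses measure.

```python
import numpy as np


def sac_losses(q1, q2, q1_next_targ, q2_next_targ, next_log_prob,
               q1_pi, q2_pi, log_prob, rewards, dones,
               log_alpha, target_entropy, gamma=0.99):
    """Evaluate SAC's critic, actor and temperature losses on one batch.

    Array arguments are 1-D with one entry per transition; log_alpha,
    target_entropy and gamma are scalars. No gradients are computed.
    """
    alpha = np.exp(log_alpha)
    # Soft Bellman target: clipped double-Q value of the next state minus
    # the entropy term alpha * log pi(a'|s').
    next_v = np.minimum(q1_next_targ, q2_next_targ) - alpha * next_log_prob
    target = rewards + (1.0 - dones) * gamma * next_v
    # Critic loss: half the sum of both Q-networks' mean squared errors.
    critic_loss = 0.5 * (np.mean((q1 - target) ** 2) + np.mean((q2 - target) ** 2))
    # Actor loss: alpha * log pi(a|s) - min_i Q_i(s, a) for actions a ~ pi(.|s).
    actor_loss = np.mean(alpha * log_prob - np.minimum(q1_pi, q2_pi))
    # Temperature loss: gradient descent raises log_alpha when the policy's
    # entropy (-log_prob) is below target_entropy and lowers it when above.
    alpha_loss = -np.mean(log_alpha * (log_prob + target_entropy))
    return float(critic_loss), float(actor_loss), float(alpha_loss)


# Hypothetical toy batch of two transitions.
batch = dict(
    q1=np.array([2.5, 2.5]), q2=np.array([2.5, 2.5]),
    q1_next_targ=np.array([2.0, 2.0]), q2_next_targ=np.array([4.0, 4.0]),
    next_log_prob=np.array([-1.0, -1.0]),
    q1_pi=np.array([10.0, 10.0]), q2_pi=np.array([12.0, 12.0]),
    log_prob=np.array([-1.0, -1.0]),
    rewards=np.array([1.0, 1.0]), dones=np.array([0.0, 0.0]),
)
print(sac_losses(**batch, log_alpha=0.0, target_entropy=-1.0, gamma=0.5))
```

With these toy numbers the critic loss is 0 because both Q estimates equal the soft target of 2.5. The actor loss is -11, negative simply because the Q values are large compared with $\alpha \log\pi$.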

- How does the entropy coefficient affect the losses?

- Can this be interpreted as the estimates becoming more accurate as the focus shifts from exploration to exploitation?

- How should negative actor losses be interpreted, and what does the actor loss tell us in general?
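To make the sign question concrete, here is a small sketch that splits the SAC actor loss $\mathbb{E}[\alpha \log\pi(a \mid s) - Q(s,a)]$ into its entropy term and its Q term. The helper `actor_loss_terms` and all numbers are hypothetical, not values from my run. Whenever the Q estimates are positive and large compared with $\alpha \log\pi$, the loss is negative. Its value then mostly tracks the scale of the Q estimates rather than how accurate they are.

```python
import numpy as np


def actor_loss_terms(alpha, log_prob, q_pi):
    """Split the SAC actor loss mean(alpha * log_prob - q_pi) into its parts."""
    log_prob = np.asarray(log_prob, dtype=float)
    q_pi = np.asarray(q_pi, dtype=float)
    entropy_term = alpha * log_prob.mean()  # alpha * E[log pi(a|s)]
    q_term = -q_pi.mean()                   # -E[min_i Q_i(s, a)]
    return entropy_term, q_term, entropy_term + q_term


# Hypothetical batch: log-densities of sampled actions and their Q estimates.
log_prob = [-0.5, 0.3, -1.2]
q_pi = [40.0, 42.0, 38.0]

for alpha in (1.0, 0.1, 0.01):
    ent, q, total = actor_loss_terms(alpha, log_prob, q_pi)
    print(f"alpha={alpha:<5} entropy term={ent:8.4f}  Q term={q:8.2f}  loss={total:8.4f}")

# Doubling every Q estimate (e.g. from larger rewards) roughly doubles the loss magnitude.
_, _, doubled = actor_loss_terms(0.1, log_prob, [2 * q for q in q_pi])
print(f"loss with doubled Q values: {doubled:8.4f}")
```

As $\alpha$ shrinks, the entropy term contributes less and the loss approaches $-\mathbb{E}[Q]$.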

Thanks a lot in advance