I am using TD3 on a custom Gym environment, but the actions saturate at the bounds of the action space. Actions at the bounds give a negative reward; to get a positive reward, the agent has to find action values somewhere in the middle of the range. The agent doesn't learn this and keeps the action values at the maximum.

The environment terminates after one step, so each episode takes exactly one action.

How can I improve my model? I want the action values to stay roughly within 80% of the maximum values.
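To make the 80% target concrete, here is a minimal sketch of the crudest way I can think of to enforce it: squash the actor's raw output with `tanh` and scale it to a fraction of the bound. The names `scale_action`, `raw`, `max_action`, and `fraction` are made up for illustration and are not from my actual code, and this assumes a symmetric action range of `[-max_action, max_action]`.

```python
import numpy as np

def scale_action(raw, max_action, fraction=0.8):
    """Map an unbounded actor output into [-fraction*max_action, fraction*max_action].

    Hypothetical helper: assumes a symmetric action space and a tanh squashing.
    """
    raw = np.asarray(raw, dtype=float)
    # tanh keeps the value in [-1, 1]; the scale factor shrinks the reachable range.
    return fraction * max_action * np.tanh(raw)

# Even a very large raw output cannot exceed 80% of the bound.
print(scale_action([0.0, 5.0, -50.0], max_action=2.0))
```

This only shrinks the reachable range, though. It does not stop the policy from saturating at the new, smaller bound, which is why I am asking about something gradient-based.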

For DDPG there is the inverting gradients technique. Could something similar be applied to TD3 so that the agent searches more within the legal action space instead of sitting at its bounds?
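For reference, this is the inverting gradients rule as I understand it, written as a small NumPy sketch rather than as part of my training code. The function name `invert_gradients` and its arguments are my own, and I am assuming `grad` is the critic's gradient with respect to the action (the direction that would increase Q). Since TD3's actor update also goes through that gradient, I assume the same scaling could be applied there, but I have not verified that it helps.

```python
import numpy as np

def invert_gradients(grad, action, low, high):
    """Scale dQ/da by the remaining headroom toward the bound it points at.

    Hypothetical sketch: `grad` is assumed to be the ascent direction for the
    action, and `low`/`high` are the legal action bounds.
    """
    grad = np.asarray(grad, dtype=float)
    action = np.asarray(action, dtype=float)
    low = np.asarray(low, dtype=float)
    high = np.asarray(high, dtype=float)
    width = high - low
    # Gradient pushes the action up: shrink it as the action nears `high`.
    up = grad * (high - action) / width
    # Gradient pushes the action down: shrink it as the action nears `low`.
    down = grad * (action - low) / width
    # If the action is already past a bound, the factor turns negative,
    # so the gradient flips sign and pulls the action back inside.
    return np.where(grad > 0, up, down)

# At the upper bound an "increase" gradient vanishes; in the middle it is halved.
print(invert_gradients([2.0, 2.0], [1.0, 0.0], low=-1.0, high=1.0))
```

For this to do anything, the actor's output layer would have to be linear (no `tanh`), so that the bounds are enforced by the gradient scaling and not by the squashing function.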

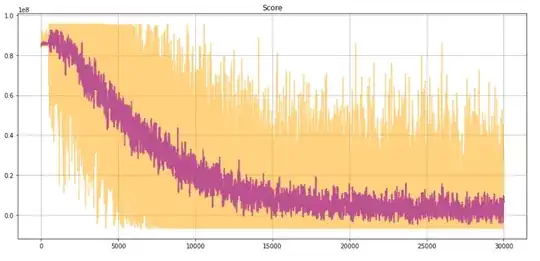

The score decreases as the number of episodes increases.