I have been studying computer vision for a while, but I still cannot understand the difference between a transformer and attention. What is it?

1 Answer

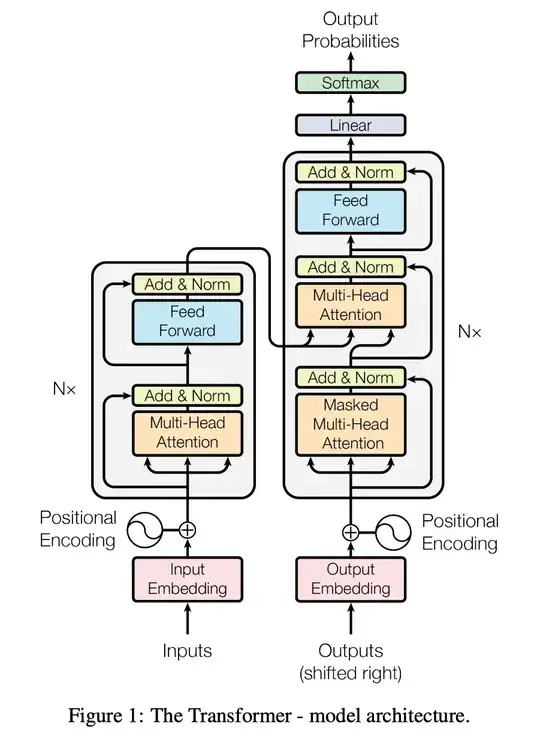

The original transformer is a feedforward neural network (FFNN)-based architecture that makes use of an attention mechanism. So, this is the difference: the transformer uses an attention mechanism (in particular, a self-attention operation), but it is more than just that mechanism. It is an encoder-decoder architecture that also makes use of other techniques, for example, positional encoding and layer normalization. In other words, the transformer is the model, while attention is a technique used by the model.
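To make the "model vs. technique" distinction concrete, here is a minimal sketch in plain Python. It is not the paper's implementation: it assumes a single attention head with no learned query/key/value projections (Q = K = V = x), a layer normalization without learned gain or bias, and made-up feed-forward weights `w1` and `w2`. All function names are hypothetical and chosen only for illustration. The point is that `self_attention` is one function, while `encoder_layer` combines it with the other ingredients.

```python
import math

def softmax(row):
    # Numerically stable softmax over one list of scores.
    m = max(row)
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]

def add(a, b):
    # Element-wise sum of two equally shaped matrices (lists of rows).
    return [[x + y for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]

def self_attention(x):
    # The *technique*: scaled dot-product self-attention.
    # Simplifying assumption: Q = K = V = x (no learned projections, one head).
    d = len(x[0])
    scores = [[sum(q * k for q, k in zip(qr, kr)) / math.sqrt(d) for kr in x]
              for qr in x]
    weights = [softmax(r) for r in scores]
    # Each output row is a weighted average of all input rows.
    return [[sum(w * v[j] for w, v in zip(wr, x)) for j in range(d)]
            for wr in weights]

def positional_encoding(n, d):
    # Sinusoidal positional encoding: sine on even indices, cosine on odd ones.
    return [[math.sin(pos / 10000 ** (i / d)) if i % 2 == 0
             else math.cos(pos / 10000 ** ((i - 1) / d))
             for i in range(d)]
            for pos in range(n)]

def layer_norm(row, eps=1e-5):
    # Normalize one token vector to zero mean and unit variance
    # (learned gain and bias are omitted in this sketch).
    mean = sum(row) / len(row)
    var = sum((v - mean) ** 2 for v in row) / len(row)
    return [(v - mean) / math.sqrt(var + eps) for v in row]

def feed_forward(row, w1, w2):
    # Position-wise two-layer network with ReLU (biases omitted).
    hidden = [max(0.0, sum(v * w for v, w in zip(row, col))) for col in zip(*w1)]
    return [sum(h * w for h, w in zip(hidden, col)) for col in zip(*w2)]

def encoder_layer(x, w1, w2):
    # Part of the *model*: attention plus residual connections,
    # layer normalization and a feed-forward sublayer.
    x = [layer_norm(r) for r in add(x, self_attention(x))]
    ff = [feed_forward(r, w1, w2) for r in x]
    return [layer_norm(r) for r in add(x, ff)]

# Toy input: 3 tokens with d_model = 4; the weights are arbitrary, not trained.
d_model = 4
tokens = [[1.0, 0.0, 0.0, 0.0], [0.0, 1.0, 0.0, 0.0], [0.0, 0.0, 1.0, 0.0]]
w1 = [[0.1 * (i + j) for j in range(8)] for i in range(d_model)]   # 4 x 8
w2 = [[0.05 * (i - j) for j in range(d_model)] for i in range(8)]  # 8 x 4

x = add(tokens, positional_encoding(len(tokens), d_model))
out = encoder_layer(x, w1, w2)
print(len(out), len(out[0]))
```

The output keeps the input shape (one vector of size `d_model` per token). A full transformer would stack several such layers, add a decoder, and use multi-head attention with learned projections.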

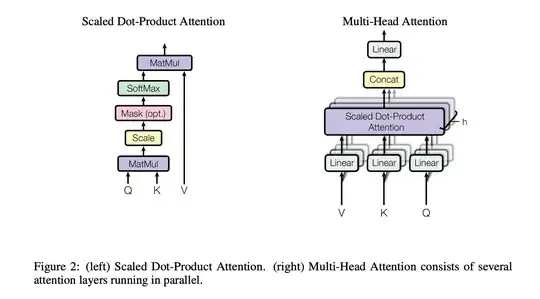

The paper that introduced the transformer, "Attention Is All You Need" (NIPS 2017), contains diagrams of both the transformer and the attention block (i.e. the part of the transformer that performs the attention operation).

Here's the diagram of the transformer.

Here's the picture of the attention mechanism (as you can see from the diagram above, the transformer uses the multi-head attention on the right).
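For reference, the two operations in that picture can be written compactly. The notation follows the paper: $Q$, $K$ and $V$ are the query, key and value matrices, $d_k$ is the key dimension, $h$ is the number of heads, and $W_i^Q$, $W_i^K$, $W_i^V$, $W^O$ are learned projection matrices.

$$
\mathrm{Attention}(Q, K, V) = \mathrm{softmax}\!\left(\frac{Q K^\top}{\sqrt{d_k}}\right) V
$$

$$
\mathrm{MultiHead}(Q, K, V) = \mathrm{Concat}(\mathrm{head}_1, \dots, \mathrm{head}_h)\, W^O,
\qquad
\mathrm{head}_i = \mathrm{Attention}(Q W_i^Q,\; K W_i^K,\; V W_i^V)
$$

In self-attention, $Q$, $K$ and $V$ are all computed from the same input sequence.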

One thing to keep in mind is that the idea of attention is not novel to the transformer: similar ideas had already been used in previous works and models (for example, here), although the specific attention mechanisms are different.

Of course, you should read the mentioned paper for more details about the transformer, the attention mechanism and the diagrams above.
