The research paper titled Improved Training of Wasserstein GANs proposed a gradient penalty in order to avoid undesired behavior due to weight clipping of the discriminator.

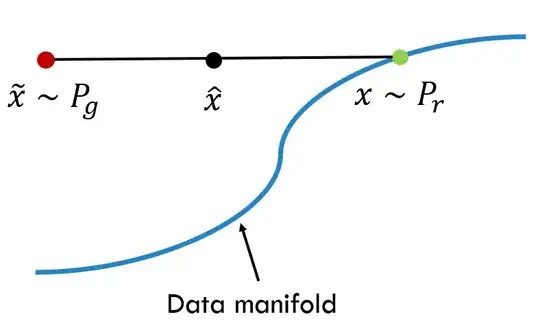

We now propose an alternative way to enforce the Lipschitz constraint. A differentiable function is 1-Lipschtiz if and only if it has gradients with norm at most 1 everywhere, so we consider directly constraining the gradient norm of the critic’s output with respect to its input. To circumvent tractability issues, we enforce a soft version of the constraint with a penalty on the gradient norm for random samples $\hat{x} \sim P_\hat{x}$. Our new objective is

L=E˜x∼Pg[D(˜x)]−Ex∼Pr[D(x)]+Eˆx∼Pˆx[(‖▽ˆxD(ˆx)‖2−1)2]

The last term in the discriminator's loss function is related to the gradient penalty. It is easy to calculate the first two terms. Since discriminator, in general, gives value in range $[0, 1]$, the first two terms are just the average of the sequence of probability values given by discriminator on generated and real images respectively.

But, how to calculate $\triangledown_{\hat{x}} D(\hat{x})$ for a given image $\hat{x}$?