A loss function measures how far a neural network's predictions are from the targets, and its gradient is used to update the network's weights. Loss functions are therefore central to training neural networks.

Consider the following excerpt from this answer:

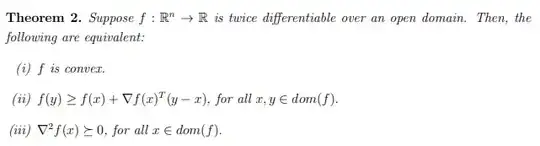

In principle, differentiability is sufficient to run gradient descent. That said, unless $L$ is convex, gradient descent offers no guarantees of convergence to a global minimiser. In practice, neural network loss functions are rarely convex anyway.

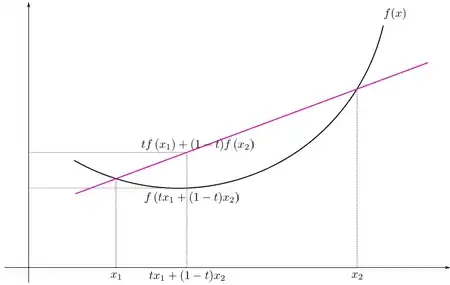

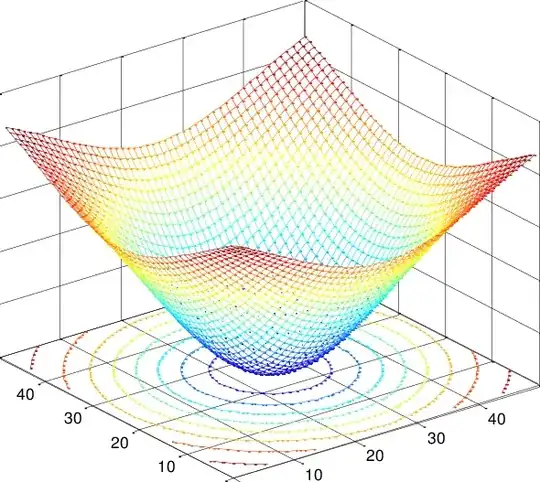

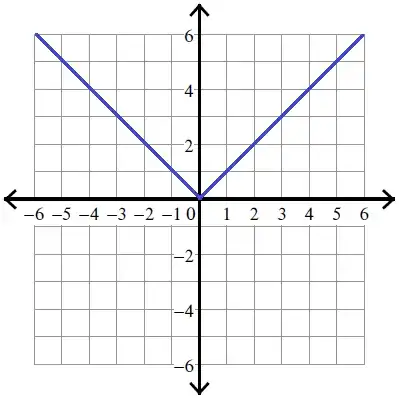

This implies that convexity of the loss function helps ensure convergence to a global minimum when we use gradient descent. There is a narrower version of this question that deals specifically with the cross-entropy loss, but this question is a general one and is not restricted to any particular loss function.
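To make the convexity point concrete, here is a minimal sketch that runs plain gradient descent on two toy 1-D functions chosen purely for illustration (they are not from the question): the convex $f(x) = (x-3)^2$ and the non-convex $g(x) = x^4 - 3x^2 + x$. The learning rate and number of steps are arbitrary assumptions. On the convex function every starting point reaches the same global minimiser. On the non-convex one the result depends on the starting point, and one start gets stuck in a local minimum. Note that convexity alone is not sufficient: the step size must also be small enough.

```python
from typing import Callable


def gradient_descent(grad: Callable[[float], float], x0: float,
                     lr: float = 0.01, steps: int = 1000) -> float:
    """Plain gradient descent on a 1-D function, given its derivative."""
    x = x0
    for _ in range(steps):
        x -= lr * grad(x)
    return x


# Convex example: f(x) = (x - 3)^2 has a single (global) minimiser at x = 3.
def convex_grad(x: float) -> float:
    return 2.0 * (x - 3.0)


# Non-convex example: g(x) = x^4 - 3x^2 + x has two local minima,
# near x = -1.30 (the global one) and x = 1.13 (local only).
def nonconvex(x: float) -> float:
    return x**4 - 3 * x**2 + x


def nonconvex_grad(x: float) -> float:
    return 4 * x**3 - 6 * x + 1


if __name__ == "__main__":
    for x0 in (-10.0, 10.0):
        print("convex, start", x0, "->", round(gradient_descent(convex_grad, x0), 4))
    for x0 in (-2.0, 2.0):
        x = gradient_descent(nonconvex_grad, x0)
        print("non-convex, start", x0, "->", round(x, 4), "g(x) =", round(nonconvex(x), 4))
```

Starting from $x_0 = 2$, gradient descent on $g$ settles in the local minimum near $1.13$, whose value is worse than the global minimum near $-1.30$ that the run from $x_0 = -2$ finds.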

How can we know whether a loss function is convex? Is there an algorithm to check it?
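One way to make the question concrete is to test the definition of convexity directly: a loss $L$ is convex in the weights if $L(t\,a + (1-t)\,b) \le t\,L(a) + (1-t)\,L(b)$ for all weight vectors $a, b$ and all $t \in [0, 1]$. The sketch below samples random $a$, $b$, $t$ in a box and looks for a violation. This is only a heuristic, assumed for illustration: finding a violation proves the loss is not convex, but finding none proves nothing. The data set, the two toy models, the sampling box, and the tolerance are all hypothetical choices.

```python
import math
import random
from typing import Callable, List, Optional, Sequence, Tuple

Loss = Callable[[Sequence[float]], float]


def find_convexity_violation(loss: Loss, dim: int, trials: int = 100_000,
                             scale: float = 3.0, tol: float = 1e-9,
                             seed: int = 0) -> Optional[Tuple[List[float], List[float], float]]:
    """Randomly search for a counterexample to the convexity inequality
    loss(t*a + (1-t)*b) <= t*loss(a) + (1-t)*loss(b).
    Returns (a, b, t) for the first violation found, or None.
    None is NOT a proof of convexity: it only means no violation was sampled."""
    rng = random.Random(seed)
    for _ in range(trials):
        a = [rng.uniform(-scale, scale) for _ in range(dim)]
        b = [rng.uniform(-scale, scale) for _ in range(dim)]
        t = rng.random()
        mid = [t * ai + (1 - t) * bi for ai, bi in zip(a, b)]
        # tol absorbs floating-point rounding so exact convex losses are not flagged.
        if loss(mid) > t * loss(a) + (1 - t) * loss(b) + tol:
            return a, b, t
    return None


# Hypothetical toy data set (not from the question).
XS = [-1.0, 0.0, 1.0, 2.0]
YS = [-1.0, 0.5, 1.0, 2.5]


def linear_mse(w: Sequence[float]) -> float:
    """MSE of the linear model y_hat = w[0] + w[1]*x, as a function of the weights."""
    return sum((w[0] + w[1] * x - y) ** 2 for x, y in zip(XS, YS)) / len(XS)


def tanh_unit_mse(w: Sequence[float]) -> float:
    """MSE of the hypothetical one-hidden-unit model y_hat = w[1]*tanh(w[0]*x)."""
    return sum((w[1] * math.tanh(w[0] * x) - y) ** 2 for x, y in zip(XS, YS)) / len(XS)


if __name__ == "__main__":
    print("linear model:", find_convexity_violation(linear_mse, dim=2))
    print("tanh unit:   ", find_convexity_violation(tanh_unit_mse, dim=2))
```

The squared error of the linear model is a convex quadratic in its weights, so no violation should be reported. The tanh model is not convex. Because tanh is odd, the weights $(w_0, w_1)$ and $(-w_0, -w_1)$ give the same loss, while the point halfway between them, $(0, 0)$, predicts zero everywhere and fits worse. The search should therefore return a counterexample.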