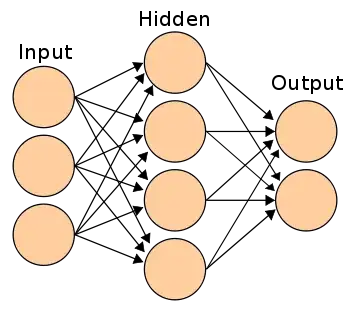

Suppose the neural network shown above is the one I want to train, and assume that each layer of the network has its own batch normalization layer.

I am focusing on the behavior of the batch normalization layer attached to the hidden layer. Assume that the mini-batch size is $3$. The following are the first four outputs of the hidden layer before any batch normalization is applied:

$$\left(\begin{array}{c} 4 \\ 5 \\ 2 \\ 1 \\ \end{array}\right), \left(\begin{array}{c} 3 \\ 4 \\ 6 \\ 0 \\ \end{array}\right), \left(\begin{array}{c} 1 \\ 4 \\ 7 \\ 9 \\ \end{array}\right), \left(\begin{array}{c} 3 \\ 5 \\ 7 \\ 9 \\ \end{array}\right)$$

So the first mini-batch is

$$\left(\begin{array}{c} 4 \\ 5 \\ 2 \\ 1 \\ \end{array}\right), \left(\begin{array}{c} 3 \\ 4 \\ 6 \\ 0 \\ \end{array}\right), \left(\begin{array}{c} 1 \\ 4 \\ 7 \\ 9 \\ \end{array}\right)$$

and it has the following per-feature statistics (the variance uses the biased estimator, i.e. it divides by the mini-batch size $3$):

$$\text{mean} = \mathbb{E}[X] = \left(\begin{array}{c} \dfrac{8}{3} \\ \dfrac{13}{3} \\ 5 \\ \dfrac{10}{3} \\ \end{array}\right), \qquad \operatorname{Var}(X) = \left(\begin{array}{c} \dfrac{14}{9} \\ \dfrac{2}{9} \\ \dfrac{14}{3} \\ \dfrac{146}{9} \\ \end{array}\right)$$
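As a quick check of these statistics, the following sketch recomputes the per-feature mean and the biased variance (dividing by the mini-batch size $m = 3$) using exact fractions. The helper name `batch_stats` is hypothetical and is not part of any library.

```python
from fractions import Fraction

# First mini-batch of hidden-layer outputs (one 4-dimensional vector per example).
batch = [
    [4, 5, 2, 1],
    [3, 4, 6, 0],
    [1, 4, 7, 9],
]

def batch_stats(vectors):
    """Per-feature mean and biased (divide-by-m) variance, computed exactly."""
    m = len(vectors)
    dims = len(vectors[0])
    mean = [Fraction(sum(v[j] for v in vectors), m) for j in range(dims)]
    var = [
        sum((Fraction(v[j]) - mean[j]) ** 2 for v in vectors) / m
        for j in range(dims)
    ]
    return mean, var

mean, var = batch_stats(batch)
print([str(x) for x in mean])  # ['8/3', '13/3', '5', '10/3']
print([str(x) for x in var])   # ['14/9', '2/9', '14/3', '146/9']
```

Dividing by $m - 1$ instead of $m$ would give different variances, so the values above confirm that the biased estimator was used.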

So my thinking is that batch normalization can only be applied from the fourth iteration onward, since only then is a full mini-batch of hidden-layer outputs available. Using the statistics of the first mini-batch, $\hat{x} = \dfrac{x - \mathbb{E}[X]}{\sqrt{\operatorname{Var}(X)}}$, the fourth vector $\left(\begin{array}{c} 3 \\ 5 \\ 7 \\ 9 \\ \end{array}\right)$ would then be mapped to approximately $\left(\begin{array}{c} 0.26 \\ 1.41 \\ 0.92 \\ 1.4 \\ \end{array}\right)$.
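The proposed mapping of the fourth vector can be reproduced with a short sketch. Like the hand computation, it assumes a scale $\gamma = 1$, a shift $\beta = 0$, and a stabilizing constant $\epsilon = 0$ (framework implementations normally add a small positive $\epsilon$ to the variance). The helper names `batch_stats` and `normalize` are hypothetical, and the sketch only reproduces the scheme described in this question (statistics of the first mini-batch applied to the fourth vector).

```python
import math

def batch_stats(vectors):
    """Per-feature mean and biased variance (divide by the batch size m)."""
    m = len(vectors)
    means = [sum(col) / m for col in zip(*vectors)]
    variances = [
        sum((x - mu) ** 2 for x in col) / m
        for col, mu in zip(zip(*vectors), means)
    ]
    return means, variances

def normalize(x, means, variances, eps=0.0):
    """Standardize one vector feature-wise; gamma = 1 and beta = 0 are assumed."""
    return [(xi - mu) / math.sqrt(var + eps)
            for xi, mu, var in zip(x, means, variances)]

first_batch = [[4, 5, 2, 1], [3, 4, 6, 0], [1, 4, 7, 9]]
fourth = [3, 5, 7, 9]

means, variances = batch_stats(first_batch)
normalized = normalize(fourth, means, variances)
print([round(v, 2) for v in normalized])  # [0.27, 1.41, 0.93, 1.41]
```

The exact values are $1/\sqrt{14}$, $\sqrt{2}$, $2\sqrt{3/14}$ and $17/\sqrt{146}$. Rounded to two decimals they are 0.27, 1.41, 0.93 and 1.41; the figures quoted in the question match these when truncated rather than rounded.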

Is it correct, then, that with batch normalization applied, the hidden layer produces the following four outputs?

$$\left(\begin{array}{c} 4 \\ 5 \\ 2 \\ 1 \\ \end{array}\right), \left(\begin{array}{c} 3 \\ 4 \\ 6 \\ 0 \\ \end{array}\right), \left(\begin{array}{c} 1 \\ 4 \\ 7 \\ 9 \\ \end{array}\right),\left(\begin{array}{c} 0.26 \\ 1.41 \\ 0.92 \\ 1.4 \\ \end{array}\right)$$

I suspect I may be completely wrong, both because in this scheme the batch normalization layer is inactive until the first mini-batch is complete and because it applies the statistics of the first mini-batch to the outputs of the next mini-batch. If this is wrong, I would like to know what is wrong, or to see a simple numerical example that runs the batch normalization layer for at least (mini-batch size + 1) iterations.