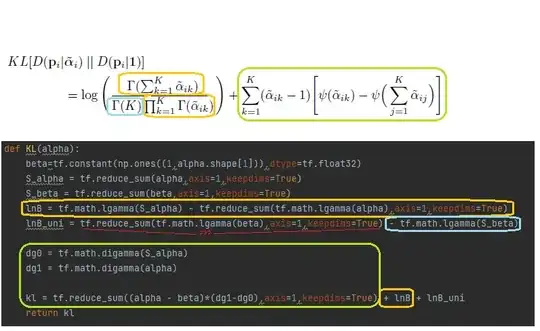

I am trying to understand a certain KL-divergence formula (which can be found on page 6 of the paper Evidential Deep Learning to Quantify Classification Uncertainty) and found a TensorFlow implementation for it. I understand most parts of the formula and put colored frames around them. Unfortunately, there is one term in the implementation (underlined red) that I can't tell how it fits in the formula.

Is this a mistake in the implementation? I don't understand how the red part is necessary.