Consider the following statements from Chapter 5, "Machine Learning Basics", of the book Deep Learning (by Ian Goodfellow, Yoshua Bengio, and Aaron Courville):

Machine learning tasks are usually described in terms of how the machine learning system should process an example. An example is a collection of features that have been quantitatively measured from some object or event that we want the machine learning system to process. We typically represent an example as a vector $\mathbf{x} \in \mathbb{R}^n$ where each entry $x_i$ of the vector is another feature. For example, the features of an image are usually the values of the pixels in the image.

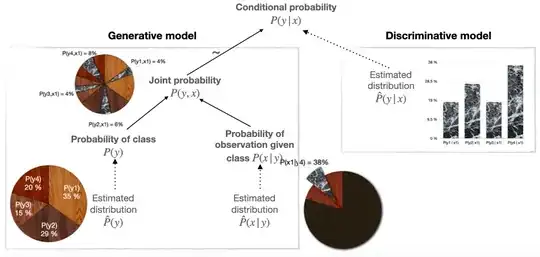

Here, an example is described as a collection of features, each of which is a real number. In probability theory, a random variable is a real-valued function on a sample space, so the values it takes are also real numbers.
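To make the quoted definition concrete, here is a minimal sketch that is not taken from the book. It flattens a small grayscale image into a feature vector $\mathbf{x} \in \mathbb{R}^n$. It then shows one possible probabilistic reading, in which the same kind of vector is produced by drawing each pixel at random. The function names, the pixel values, and the uniform distribution over 0 to 255 are all assumptions chosen for illustration.

```python
import random


def image_to_feature_vector(image):
    """Flatten a 2-D grid of pixel intensities into one feature vector."""
    return [float(pixel) for row in image for pixel in row]


def sample_example(n, rng):
    """Draw n pixel intensities uniformly from 0..255 (an assumed distribution)."""
    return [float(rng.randint(0, 255)) for _ in range(n)]


# Hypothetical 2x3 grayscale image; the pixel values are made up.
image = [
    [0, 128, 255],
    [64, 32, 16],
]

# Deterministic view: one example, n = 6 features, each a real number.
x = image_to_feature_vector(image)

# Probabilistic view (assumption of this sketch): each feature is the
# observed value of a random variable, so x_sampled is one realization
# of a random vector with the same length as x.
rng = random.Random(0)  # fixed seed so the draw is reproducible
x_sampled = sample_example(len(x), rng)

print(x)
print(x_sampled)
```

In both cases the result is just a list of real numbers. The difference lies only in whether the numbers are treated as fixed measurements or as outcomes of a random draw.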

Can I always interpret features in machine learning as random variables, or are there exceptions to this interpretation?