In section 3.1 of this paper, the authors state:

> Scaling the filter instead of the image allows the generation of saliency maps of the same size and resolution as the input image.

How is this possible?

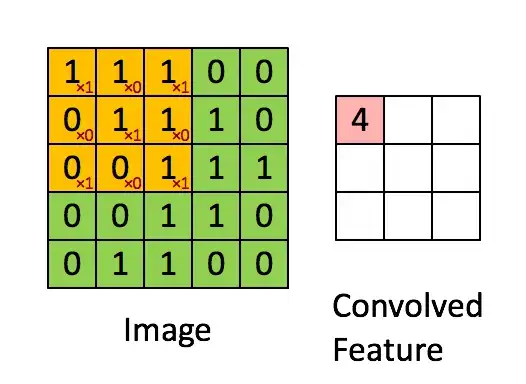

From what I have understood, the process of filtering the image is similar to that of a convolution operation, like this:

However, if this is true, shouldn't we get differently sized outputs (i.e. saliency maps) for different filter sizes?
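To make my concern concrete, here is a minimal sketch of what I have in mind. It is my own illustration, not the paper's method: I am assuming an unpadded ("valid") sliding-window filter, and the box (mean) filter and the helper names `filter_valid` and `output_shape` are hypothetical stand-ins for whatever filter the authors actually use.

```python
def filter_valid(image, k):
    """Apply a k x k box (mean) filter with no padding ('valid' mode).

    Assumption: the box filter is only a stand-in for the paper's filter.
    """
    h, w = len(image), len(image[0])
    out = []
    for i in range(h - k + 1):          # the window must fit inside the image
        row = []
        for j in range(w - k + 1):
            window_sum = sum(
                image[i + di][j + dj] for di in range(k) for dj in range(k)
            )
            row.append(window_sum / (k * k))
        out.append(row)
    return out


def output_shape(image, k):
    """Return (height, width) of the filtered output."""
    out = filter_valid(image, k)
    return (len(out), len(out[0]))


# A toy 8 x 8 "image" filtered at three filter scales.
image = [[float(r * 8 + c) for c in range(8)] for r in range(8)]
shapes = {k: output_shape(image, k) for k in (3, 5, 7)}
print(shapes)  # {3: (6, 6), 5: (4, 4), 7: (2, 2)}
```

Under this assumption, an input of size H x W and a filter of size k x k give an output of size (H - k + 1) x (W - k + 1), so every filter scale produces a different map size. As far as I know, the output only stays H x W if the image borders are padded, and I cannot tell whether the paper does that.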

I think I am misunderstanding how the filtering process works, and it may actually differ from the convolution used in a CNN. I would highly appreciate any insight on the above.

Note: This is a follow-up to this question.