As far as I know, FaceNet requires a square image as input.

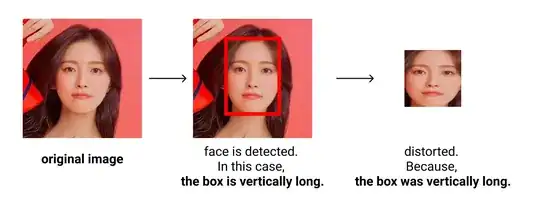

MTCNN can detect a face and crop it from the original image as a square, but the face gets distorted in the process.
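One common way to avoid the stretching is to enlarge the detected box to a square around its centre before cropping, rather than resizing a non-square box to a square. The sketch below assumes boxes in `(x1, y1, x2, y2)` pixel format (the layout returned by facenet-pytorch's `MTCNN.detect`). The helpers `square_box` and `aspect_distortion` are hypothetical and not part of facenet-pytorch.

```python
def square_box(box, img_w, img_h):
    """Hypothetical helper: expand an (x1, y1, x2, y2) box to a square
    around its centre, shifted back inside an img_w x img_h image."""
    x1, y1, x2, y2 = box
    w, h = x2 - x1, y2 - y1
    # Use the longer side so the whole face fits without stretching.
    # If the image is too small to hold that square, the side is capped,
    # which crops part of the original box.
    side = min(max(w, h), img_w, img_h)
    cx, cy = x1 + w / 2, y1 + h / 2
    nx1, ny1 = cx - side / 2, cy - side / 2
    # Shift the square back inside the image if it spills over an edge.
    nx1 = min(max(nx1, 0), img_w - side)
    ny1 = min(max(ny1, 0), img_h - side)
    return (nx1, ny1, nx1 + side, ny1 + side)


def aspect_distortion(box):
    """Hypothetical helper: how much wider (relative to its height) the
    face appears after a w x h box is resized to a square; 1.0 = none."""
    x1, y1, x2, y2 = box
    return (y2 - y1) / (x2 - x1)


if __name__ == "__main__":
    box = (10, 20, 50, 80)  # a 40 x 60 detection, i.e. a tall box
    print("distortion if resized directly:", aspect_distortion(box))
    print("square crop instead:", square_box(box, 200, 200))
```

Cropping the squared box and then resizing it to FaceNet's input size scales both axes by the same factor, so the face keeps its proportions at the cost of including a little more background.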

Is it okay to feed the converted (now square) distorted image into FaceNet? Does it affect the accuracy of the similarity calculation (embeddings)?
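One way to check this empirically is to embed the same face once from a distorted crop and once from an undistorted crop, then compare the two embeddings. The sketch below shows the comparison step only, on toy vectors; in practice the vectors would be FaceNet outputs (assumption: any fixed-length embedding works). Cosine similarity and Euclidean distance on L2-normalised vectors are shown; for unit vectors they carry the same information, since distance² = 2 − 2·cosine.

```python
import math


def l2_normalize(v):
    """Scale a vector to unit length."""
    norm = math.sqrt(sum(x * x for x in v))
    return [x / norm for x in v]


def cosine_similarity(a, b):
    """Cosine of the angle between two embeddings (1.0 = same direction)."""
    a, b = l2_normalize(a), l2_normalize(b)
    return sum(x * y for x, y in zip(a, b))


def euclidean_distance(a, b):
    """Euclidean distance between the L2-normalised embeddings."""
    a, b = l2_normalize(a), l2_normalize(b)
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


if __name__ == "__main__":
    # Toy stand-ins for the embeddings of a distorted and an undistorted crop.
    emb_distorted = [0.9, 0.1, 0.4]
    emb_square = [0.8, 0.2, 0.5]
    print("cosine:", cosine_similarity(emb_distorted, emb_square))
    print("distance:", euclidean_distance(emb_distorted, emb_square))
```

If the distorted and undistorted embeddings of the same person stay much closer to each other than to embeddings of other people, the distortion is probably not hurting matching much.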

For similarity (classification of known faces), I am going to put some custom layers on top of FaceNet.

(If it is okay, is that because every image would be distorted anyway? Then we would not be comparing a normal image against a distorted one, but a distorted image against another distorted image, which would be a fair comparison?)

Original issue: https://github.com/timesler/facenet-pytorch/issues/181.