I want to calculate an upper bound on how many training points an MLP regressor can fit with ~0 error. I don't care about the test error, I want to overfit as much as possible the (few) training points.

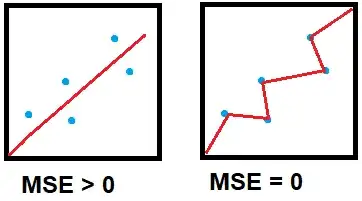

For example, for linear regression, it's impossible to achieve 0 MSE if the points don't lie on a line. An MLP, however, can overfit and include these points in the prediction.

So, my question is: given an MLP and its parameter, how can I calculate an upper bound on how many points it can exactly fit?

I was thinking to use the VC dimension to estimate this upper bound.

The VCdim is a metric for binary classification models but a pseudo dimension can be adapted to real-value regression models by thresholding the output:

$$Pdim(\mathcal{G}) = {VCdim}(\{(x,t) \mapsto 1_{g(x)-t>0}:g \in \mathcal{G}\})$$ (from the book Foundation of Machine Learning, 2nd edition, definition 11.5)

where $\mathcal{G}$ is the concept class of the regressor, $g(x)$ is the regressor out and $t$ is a threshold.

The model is an MLP with RELU activations. As far as I understood on Wikipedia, it should have a VCdim equal to the number of the weights (correct me if I'm wrong).

So, the question is: how to practically calculate the pseudo-dim for the regressor given the VCdim? Does it make sense for the purpose that I want to achieve?