ImageNet dataset is an established benchmark for the measurement of the performance of CV models.

ImageNet involves 1000 categories and the goal of the classification model is to output the correct label given the image.

Researchers compete with each other to improve the current SOTA on this dataset, and the current state of the art is 90.88% top-1 accuracy.

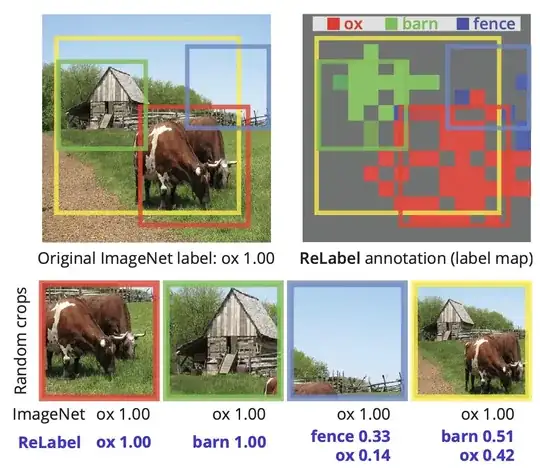

If the images involved only a single object and background - this problem would be well-posed (at least from our perceptual point of view). However, many images in the dataset involve multiple objects - a group of people, a person, and an animal - the task of classification becomes ambiguous.

Here are some examples.

The true class for this image is bicycle. However, there is a group of people. The model that recognizes these people would be right from the human point of view, but the label would be wrong.

Another example is the fisherman with fish called tench.

The model could have recognized the person, but be wrong.

So, my question is - how does much the performance of the best models of ImageNet reflect their ability to capture complicated and diverse image distribution, and how much the final result on the validation set is accidental. When there are multiple objects present on the image, the network can predict any of them. Prediction can match the ground truth class or can differ. And for the model, that happens to be luckier, this benchmark will show better performance. The actual quality can be the same, in fact.