I'd like to design a deep learning architecture in which the output of a primary neural network $M_{\theta}$ determines which neural network $N^i_{\alpha}$ in a set of secondary networks $\mathcal{N}$ to use next. For example, $M_{\theta}$ could be a multiclass classifier, where the predicted class determines $N^i_{\alpha}$. The networks may have different dimensions and activation functions. Is there a name for this type of architecture?
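The routing described above can be sketched in a few lines. This is a minimal NumPy sketch, and it makes several assumptions that the question does not state. The primary network is a small MLP classifier with one class per secondary network. Every secondary network maps the same input size to the same output size, and only their hidden layers and activations differ. The weights are random rather than trained. `MLP`, `router`, `experts` and `forward` are hypothetical names chosen for illustration.

```python
import numpy as np

rng = np.random.default_rng(0)


def relu(z):
    return np.maximum(z, 0.0)


class MLP:
    """A small feed-forward network with its own layer sizes and activation."""

    def __init__(self, sizes, activation):
        self.weights = [rng.normal(0.0, 0.5, (m, n)) for m, n in zip(sizes[:-1], sizes[1:])]
        self.biases = [np.zeros(n) for n in sizes[1:]]
        self.activation = activation

    def __call__(self, x):
        for i, (w, b) in enumerate(zip(self.weights, self.biases)):
            x = x @ w + b
            if i < len(self.weights) - 1:  # no activation after the last layer
                x = self.activation(x)
        return x


D, OUT = 4, 3  # hypothetical input and output sizes

# Secondary networks N^i (hypothetical): different depths, widths and
# activation functions, but a shared input size D and output size OUT.
experts = [
    MLP([D, 16, OUT], relu),
    MLP([D, 5, 5, OUT], np.tanh),
    MLP([D, OUT], relu),  # a single linear layer
]

# Primary network M (hypothetical): a classifier with one class per expert.
router = MLP([D, 8, len(experts)], relu)


def forward(x):
    """Send each row of x only through the expert chosen by the router's argmax."""
    choice = router(x).argmax(axis=1)
    out = np.empty((x.shape[0], OUT))
    for i, expert in enumerate(experts):
        mask = choice == i
        if mask.any():  # unselected experts are never evaluated
            out[mask] = expert(x[mask])
    return out, choice


x = rng.normal(size=(6, D))
y, choice = forward(x)
print(choice)  # index of the expert used for each input
```

Because `argmax` is not differentiable, gradients do not flow from the chosen expert back into the router in this sketch. The router would have to be trained another way, for example as an ordinary classifier on labelled data.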

Asked

Active

Viewed 81 times

0

1 Answers

1

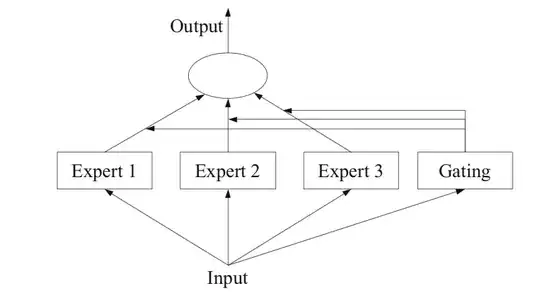

Mixture of Experts might be what you are looking for.

A Mixture of Experts (MoE) model divides a task into subtasks and uses a separate model, or "expert", for each subtask. In your case, these would be the networks in $\mathcal{N}$. It also defines a gating model that decides which expert(s) to use, which plays the role of your $M_{\theta}$. At inference time, the gating model's output is used to pool or select the experts' predictions and make the final decision.
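A soft mixture of experts can be sketched as follows. Here the gate's softmax weights pool the outputs of all experts. This is a minimal NumPy sketch that assumes linear experts with random weights for brevity. `gate_w`, `expert_w` and `moe_forward` are hypothetical names. Every expert is evaluated for every input. If you keep only the expert with the largest gate weight instead of taking the weighted sum, you get the hard selection described in the question.

```python
import numpy as np

rng = np.random.default_rng(1)

D, OUT, K = 4, 3, 3  # hypothetical input size, output size and number of experts


def softmax(z):
    z = z - z.max(axis=-1, keepdims=True)  # subtract the max for numerical stability
    e = np.exp(z)
    return e / e.sum(axis=-1, keepdims=True)


# Each expert is a single linear map here (an assumption for brevity); any
# network with matching input/output sizes could take its place.
expert_w = rng.normal(size=(K, D, OUT))
gate_w = rng.normal(size=(D, K))


def moe_forward(x):
    """Soft mixture of experts: all experts run and the gate weights their outputs."""
    gates = softmax(x @ gate_w)                          # (batch, K), each row sums to 1
    expert_out = np.einsum("bd,kdo->bko", x, expert_w)   # (batch, K, OUT)
    y = np.einsum("bk,bko->bo", gates, expert_out)       # gate-weighted sum over experts
    return y, gates


x = rng.normal(size=(5, D))
y, gates = moe_forward(x)
```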

DKDK

- Can you please provide more details about these "Mixture of Experts"? On our site, we expect users to provide more details than just a name and a link to an external resource. If you don't feel like providing more details, I can convert this answer to a comment. – nbro Jan 05 '22 at 09:33

- Sorry for the late response, I was busy last night and completely forgot about this question. I added some more details as you said. – DKDK Jan 06 '22 at 01:25

- I am not sure if "mixture of experts" is a widely used term. Maybe you mean "ensemble learning"? – nbro Jan 06 '22 at 09:02

- I think mixture of experts is more suitable for the OP's question. In ensemble learning, the separate models don't necessarily have divided problem spaces. – DKDK Jan 06 '22 at 09:18

- Thanks, this is what I was seeking. The main drawback here is that each of the expert networks is evaluated for each input. In my architecture, these networks could be arbitrarily complex, and we may want to use only one expert for a given input. Anyway, good suggestion. Regarding "I am not sure if 'mixture of experts' is a widely used term": the term is widely used in the ML literature, and mixture of experts is a type of ensemble learning. – Wowee Jan 09 '22 at 23:52