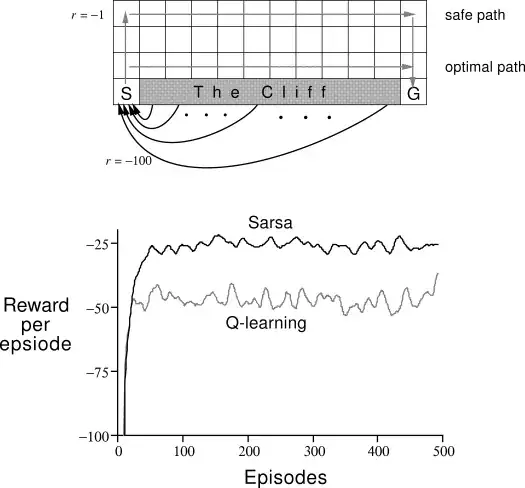

It is important to note that the graph shows reward received during training. This includes rewards due to exploratory moves, which sometimes involve the agent falling off the cliff, even if it has already established that will lead to a large penalty. Q-learning does this more often than SARSA because Q learning targets learning values of the optimal greedy policy, whilst SARSA targets learning the values of the approximately optimal $\epsilon$-greedy policy. The cliff walking setup is designed to make these policies different.

The graph shows that during training, SARSA performs better at the task than Q learning. This may be an important consideration if mistakes during training have real expense (e.g. someone has to keep picking the agent robot off the floor whenever it falls off the cliff).

If you stopped training after episode 500 (assuming both agents had converged to accurate enough action value tables at that point), and ran both agents with the greedy policy based on their action values, then Q learning would score -13 per episode, and SARSA would be worse at -17 per episode. Both would perform better than during training, but Q learning would have the best trained policy.

To make SARSA and Q-learning equivalent in the long term, you would need to decay the exploration policy parameter $\epsilon$. If you did this during the training process, at a slow enough rate, ending with no exploration, then two approaches would converge to the same optimal policy and same reward per episode (of -13).