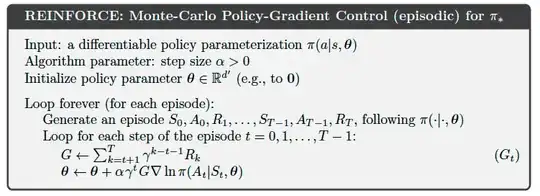

Consider the following algorithm from the textbook titled Reinforcement Learning: An Introduction (second edition) by Richard S. Sutton and Andrew G. Bart

While playing the game for the generation of an episode trajectory, how is the action selected by the agent? I mean, how does the agent selection action $A_i$ from state $S_i$ for $0 \le i \le T-1$. I am getting this doubt as the policy is stochastic in nature and doesn't give a single action as output.

But the algorithm says to generate the episode following the stochastic policy function.

Is it always the action that has the high probability in $\pi(a|s,\theta)$?

Note: The trajectory here is $(S_0,A_0,R_1 \cdots S_{T-1},A_{T-1},R_{T})$,