A few papers I have come across say that BLEU is not an appropriate evaluation metric for chatbots, so they use the perplexity.

First of all, what is perplexity? How to calculate it? And why is perplexity a good evaluation metric for chatbots?

A few papers I have come across say that BLEU is not an appropriate evaluation metric for chatbots, so they use the perplexity.

First of all, what is perplexity? How to calculate it? And why is perplexity a good evaluation metric for chatbots?

With perplexity you are trying to evaluate the similarity between the token (in your case probably sentences) distribution generated by the model and the one in the test data.

For instance, assuming you have $M$ sentences $s_1, \dots, s_M$, each with probability $P(s_i)$, the perplexity is $$2^{-l},$$ where $l = \frac{1}{M} \sum P(s_i) \log P(s_i)$ for $i \in [1 \dots M]$.

Note that while perplexity might be useful to capturing certain aspects of the model, it is by no means perfect, and, even if you are able to reach great perplexity scores, it will not necessarily translate to a good or even working chat bot.

For the definition and calculation of perplexity, please refer to this answer.

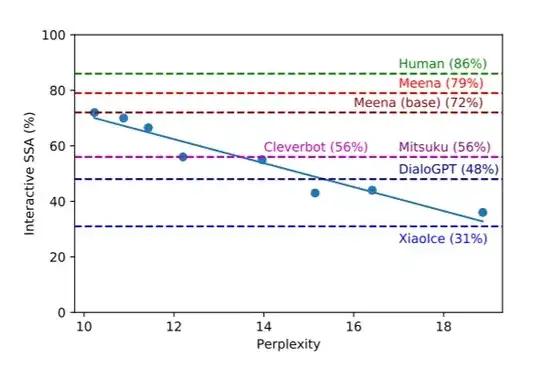

Google proposed a human evaluation metric called Sensibleness and Specificity Average (SSA) which combines two fundamental aspects of a humanlike chatbot: making sense and being specific. And they conducted some experiments and found that perplexity aligns very well with the SSA.

Here is the explanation in the paper:

Perplexity measures how well the model predicts the test set data; in other words, how accurately it anticipates what people will say next.

Our results indicate most of the variance in the human metrics can be explained by the test perplexity.

Their experiments showed a very strong correlation between SSA and perplexity(the lower the perplexity the higher the SSA).

References: