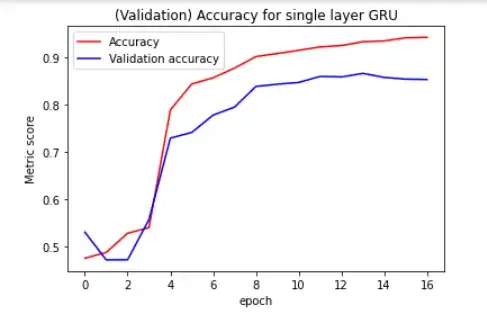

I have implemented a GRU to deal with youtube comment data. I am a bit confused about why the validation score seems to even out around 70% and then keeps rising, this doesn't look like overfitting from what I'm used to since it keeps rising. Is this normal? Does anyone know what's happening here?

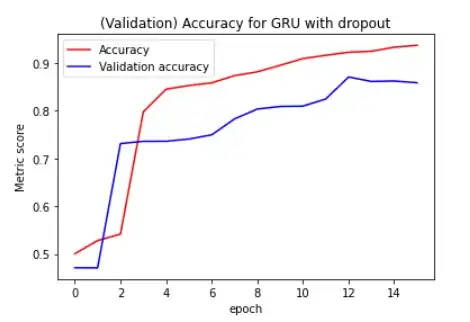

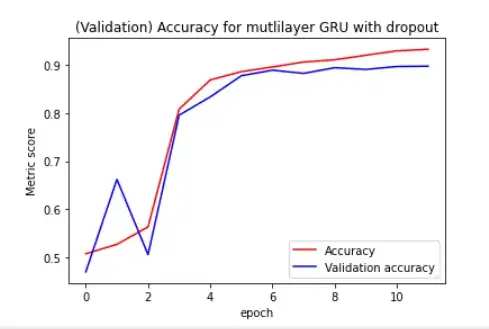

I've implemented the GRU as a simple GRU, then GRU with dropout and then a multilayer (2 layered) GRU with dropout. The graphs are shown below.

As you can see, in the multilayer GRU with dropout , the validation accuracy does nicely follow the training accuracy. Does this simply mean that the other models are simply not capable of capturing certain information? Is there a way to improve these results, what parameters should be optimized?