I am trying to train DQN and Double DQN (DDQN) agents to play a 10x10 GridWorld game, so that I can compare the two algorithms as I increase the number of moves the agent can take.

The rewards are as follows:

- A step = -1

- Key = 100

- Door = 100

- Death wall = -100

See the setup of the AI in the code.

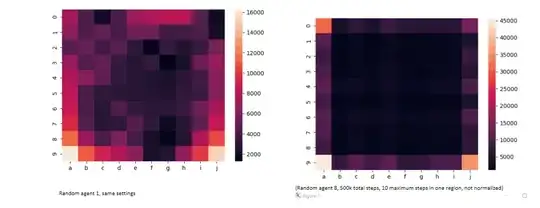

My problem is that, regardless of what I do, the agent ends up following the same strategy: it goes to the outer walls and stays there. I presume this is because the outer walls give the least punishment per step, as the risk of dying is considerably lower there. At the same time, as the number of moves increases, the chance of ending up at the outer walls increases as well (see the heatmaps).
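To make that presumption concrete, here is a rough sketch of the discounted returns involved. The discount factor `GAMMA = 0.99` is an illustrative assumption (the value used in the repository may differ), and so is the choice that the death wall's -100 replaces the -1 step cost on the final move. The function names are hypothetical.

```python
# Rewards from the question.
STEP, KEY, DOOR, DEATH = -1, 100, 100, -100

# Assumed discount factor, for illustration only.
GAMMA = 0.99


def discounted_return(rewards, gamma=GAMMA):
    """Return sum over t of gamma**t * rewards[t] for a finite reward sequence."""
    total = 0.0
    for t, reward in enumerate(rewards):
        total += (gamma ** t) * reward
    return total


def idle_return(horizon, gamma=GAMMA):
    """Return for wandering safely for `horizon` steps without reaching anything."""
    return discounted_return([STEP] * horizon, gamma)


def death_return(steps_before_death, gamma=GAMMA):
    """Return for taking some safe steps and then hitting a death wall."""
    return discounted_return([STEP] * steps_before_death + [DEATH], gamma)


if __name__ == "__main__":
    for horizon in (10, 100, 1000):
        print(horizon, round(idle_return(horizon), 2))
    print("immediate death:", death_return(0))
```

Under these assumed numbers, idling forever approaches -1 / (1 - 0.99) = -100, so for any finite number of moves, hugging a safe wall is never worse than dying immediately. That would be consistent with the behaviour I am seeing, though it does not by itself explain why the key and door rewards are not being discovered.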

I've tried the following:

- Drastically slowing the epsilon decay, so that it reaches its final value only in the last 10% of the training steps.

- Running 100k moves just to add to the EMR before I start actually counting the steps.

- Increasing the size of the network

- Giving a reward of -2 for staying on the same tile

- Feeding it the whole grid as the input vector
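For reference, the slowed epsilon schedule from the first item can be sketched as a linear decay that only reaches its final value once 90% of the training steps have passed. This is a minimal sketch, not the code from the repository: the function name, `eps_start`, `eps_end`, and the linear shape are all assumptions.

```python
def epsilon_at(step, total_steps, eps_start=1.0, eps_end=0.05, decay_fraction=0.9):
    """Linearly anneal epsilon from eps_start to eps_end.

    The final value is reached after `decay_fraction` of the training steps
    and held constant for the remainder (the last 10% with the default).
    All default values here are illustrative assumptions.
    """
    decay_steps = max(1, int(total_steps * decay_fraction))
    progress = min(step / decay_steps, 1.0)
    return eps_start + progress * (eps_end - eps_start)


if __name__ == "__main__":
    total = 14_000_000
    for step in (0, total // 2, int(total * 0.9), total):
        print(step, round(epsilon_at(step, total), 3))
```

With a schedule like this the agent acts almost randomly for most of training, so the replay memory is dominated by exploratory transitions until very late.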

None of this has worked. The longest I have trained a single model is 14 million training steps, and it still ended up with the same strategy as before.

I evaluate the model as follows (in this order):

- Every 1 million training steps, I run 50-100k evaluation steps and record the outcome of every step

- I generate a heatmap to see whether the agent remains in the same few places (which are not the key or the door)

- I run its best policy and visually judge whether it has improved
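The heatmap step boils down to counting visits per tile. The sketch below shows one way to do that and to put a number on "staying at the outer walls" instead of judging it by eye. It is not the code in HeatMapEvaluation.py: the function names, the `(row, col)` position format, and the border-fraction metric are assumptions for illustration.

```python
def visit_counts(positions, size=10):
    """Count how often each tile of a size x size grid was visited.

    `positions` is an iterable of (row, col) tuples, one per evaluation step.
    """
    counts = [[0] * size for _ in range(size)]
    for row, col in positions:
        counts[row][col] += 1
    return counts


def border_fraction(counts):
    """Fraction of all recorded visits that fall on the outermost ring of tiles."""
    size = len(counts)
    total = sum(sum(row) for row in counts)
    if total == 0:
        return 0.0
    last = size - 1
    border = sum(
        counts[r][c]
        for r in range(size)
        for c in range(size)
        if r in (0, last) or c in (0, last)
    )
    return border / total


if __name__ == "__main__":
    # Hypothetical trajectory: three visits on the border, one in the interior.
    trajectory = [(0, 0), (0, 1), (5, 5), (9, 9)]
    print(border_fraction(visit_counts(trajectory)))
```

Tracking a single number like this across checkpoints would make it easier to tell whether the wall-hugging behaviour is getting better or worse over training.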

CODE: https://github.com/BrugerX/IISProject3Ugers

Training is done through TrainingLoop.py and evaluation through HeatMapEvaluation.py.

What have I missed? Why, even after 14 million training steps, has the model still not learnt to memorize the path in GridWorld?