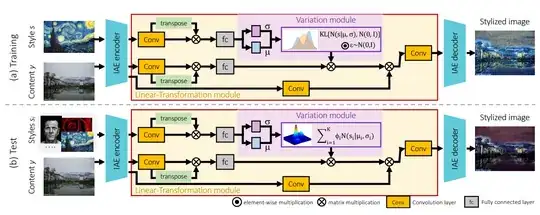

I have come across this research paper where a Variational Autoencoder is used to map multiple styles from reference images to a linear latent space and then transfer the style to another image like this:

What I don't understand from the paper is how does the Variation module learn the style of the reference images as opposed to the content?

And since VAEs are generative models, is it possible to generalise this to generate styles from the trained variation model by feeding it random noise instead of the encoded reference images?