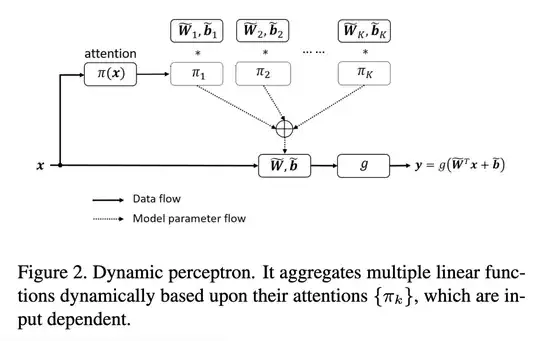

Are there any examples of people performing multiple convolutions at a single depth and then performing feature max aggregation as a convex combination as a form of "dynamic convolutions"?

To be more precise: Say you have an input x, and you generate

Y_1 = conv(x)

Y_2 = conv(x)

Y_3 = conv(x)

Y = torch.cat([Y_1,Y_2,Y_3])

Weights = nn.Parameter(torch.rand(1,3))

Weights_normalized = nn.softmax(weights)

Attended_features = torch.matmul(Y, weights_normalized.t())

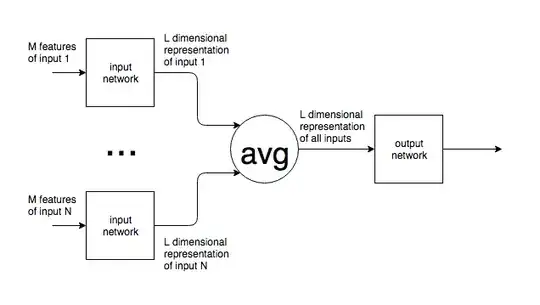

So, essentially, you are learning a weighting of the feature maps through this averaging procedure.

Some of you may be familiar with the "Dynamic Convolutions" paper. I’m just curious if you all would consider this dynamic convolution or attention of feature maps. Have you seen it before?

If the code isn’t clear, this is just taking an optimized linear combination of the convolution algorithm feature maps.