I am watching the lecture from Brown University (on Udemy) and have reached the part on Temporal Difference Learning.

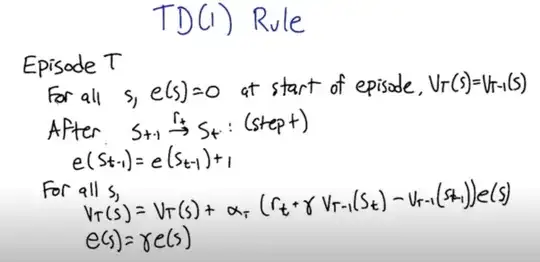

In the pseudocode for TD(1) (shown in the screenshot above), the eligibility is initialised as $e(s) = 0$ for all states. Later on, this eligibility is decayed by a factor of $\gamma$.
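For concreteness, here is a minimal sketch of tabular TD(1) with accumulating eligibility traces, written from the description above rather than copied from the screenshot. The function name `td1_episode`, the transition format `(s_prev, reward, s_next)`, and the choice to compute every TD error from values frozen at the start of the episode are all assumptions; the course's pseudocode may differ in those details.

```python
from collections import defaultdict


def td1_episode(V, episode, alpha=0.1, gamma=1.0):
    """One episode of tabular TD(1) with accumulating eligibility traces (sketch).

    V       -- dict mapping state -> value estimate before the episode
    episode -- ordered list of (s_prev, reward, s_next) transitions (assumed format)
    Returns a new dict of updated value estimates; V itself is not modified.
    """
    V_old = dict(V)          # assumption: TD errors use values frozen at episode start
    V_new = dict(V)
    e = defaultdict(float)   # e(s) = 0 for every state at the start of the episode

    for s_prev, r, s_next in episode:
        e[s_prev] += 1.0     # the state just left becomes (more) eligible for credit
        # One-step TD error; states missing from V (e.g. terminal) count as 0.
        delta = r + gamma * V_old.get(s_next, 0.0) - V_old.get(s_prev, 0.0)
        # States with e(s) = 0 would not change, so only traced states are visited.
        for s in list(e):
            V_new[s] = V_new.get(s, 0.0) + alpha * delta * e[s]
            e[s] *= gamma    # decay: earlier states get discounted credit later on
    return V_new


# Usage: a three-step chain s0 -> s1 -> s2 -> T with rewards 1, 2, 3.
episode = [("s0", 1.0, "s1"), ("s1", 2.0, "s2"), ("s2", 3.0, "T")]
print(td1_episode({}, episode, alpha=0.5, gamma=1.0))
# prints {'s0': 3.0, 's1': 2.5, 's2': 1.5}
```

With $\gamma = 1$ and all values starting at zero, each state ends up moved by $\alpha$ times the total reward that followed it in the episode; the trace $e(s)$ is what lets a reward observed at a later step still reach the states visited earlier.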

My question is: what does 'eligibility' mean in an intuitive sense? The dynamic programming algorithms covered earlier (value iteration and policy iteration) have no such concept, so why does it appear in TD? Is it because we are now effectively sampling episodes, unlike before, when we swept over all states and all possible actions?

Insights welcome.