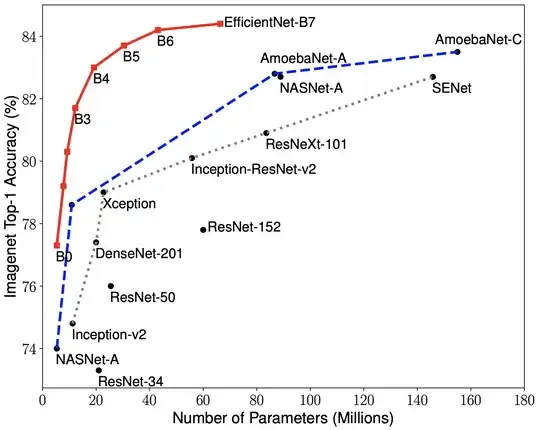

I have videos that are each about 30-40 minutes long. The first 5-10 minutes (recorded at 60 fps, which can be down-sampled to 5 fps) show one type of activity, categorized as label-1, and the rest of the video is label-2. I started off by using a CNN-LSTM for this prediction (ResNet-50 + LSTM + FC classifier).

I am using PyTorch.

For training, my initial approach was to treat this as an activity classification task, so I split my videos into smaller segments, each with its own label.

video1.mp4 (5 mins) --> label-1

Split into 30-second segments -->

video1_0001.mp4 --> label-1

...

video1_0010.mp4 --> label-1
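To make the splitting concrete, here is a minimal sketch of how the segments and their labels are enumerated. The function name `make_segments`, the tuple layout, and the choice to drop a trailing remainder are illustrative assumptions; the sketch only computes time ranges and does not cut any video.

```python
def make_segments(video_stem, duration_s, label, segment_s=30):
    """Enumerate fixed-length segments of one labeled video.

    Returns a list of (segment_name, start_s, end_s, label) tuples.
    A trailing remainder shorter than segment_s is dropped (an assumption).
    """
    n_segments = int(duration_s // segment_s)
    segments = []
    for i in range(n_segments):
        start = i * segment_s
        # Names follow the video1_0001.mp4 pattern used above.
        name = f"{video_stem}_{i + 1:04d}.mp4"
        segments.append((name, start, start + segment_s, label))
    return segments


# A 5-minute label-1 video split into 30-second segments.
segments = make_segments("video1", duration_s=5 * 60, label="label-1")
print(len(segments), segments[0][0], segments[-1][0])
# prints: 10 video1_0001.mp4 video1_0010.mp4
```

Every segment inherits the label of the video portion it was cut from.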

But with this strategy, the network does not learn even after 100 epochs. I can fit at most about 40 frames on the 2 GPUs, but a 30-second segment of video at 5 fps has about 150 frames. Any further subsampling does not seem to capture the essence of the video segment.
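The frame arithmetic behind this mismatch can be sketched as follows. The helper names `source_frame_indices` and `uniform_subsample` are hypothetical, and even spacing is just one possible way to squeeze a segment into the roughly 40 frames that fit in memory.

```python
def source_frame_indices(segment_s=30, source_fps=60, target_fps=5):
    """Indices of the source frames kept when downsampling one segment."""
    stride = source_fps // target_fps  # 60 // 5 = keep every 12th frame
    return list(range(0, segment_s * source_fps, stride))


def uniform_subsample(indices, max_frames):
    """Pick max_frames evenly spaced entries from indices (all if fewer)."""
    if len(indices) <= max_frames:
        return list(indices)
    step = len(indices) / max_frames
    return [indices[int(i * step)] for i in range(max_frames)]


kept = source_frame_indices()              # 30 s at 5 fps
fit = uniform_subsample(kept, max_frames=40)
print(len(kept), len(fit))
# prints: 150 40
```

Squeezing a 30-second segment into 40 frames corresponds to an effective rate of about 1.3 fps, which is the level of subsampling that seems to lose too much.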

I also tried training without shuffling and with a single thread, so that a single stream is loaded continuously. But perhaps that is not the right strategy.

I would like some help on how to tackle this problem, and I would really appreciate any insights into a training strategy for it.

- Is using CNN-LSTM the right strategy here?

=== Update ===

After reading a few other posts on similar topics, I feel that to get the network to see a larger part of the sequence, I will have to either use more GPUs or resize the images. However, since the pretrained ResNet accepts 224x224 inputs, I will need more GPUs. But I am curious: is there another strategy? The question could also be framed as: what is the ideal segment length that would enable the network to learn?

In my judgment, a 30-second video sampled at 5 fps captures the bare minimum of context. From what I have observed so far, going below this has not allowed the network to learn.