I am trying to label code snippets and I base on this article: https://arxiv.org/pdf/1906.01032.pdf

My dataset is just code snippets (tokenized as ascii characters) and 500 different labels from StackOverflow. Currently I have around 1,600,000 samples after filtering these with negative votes and less than 10 characters

This is my current implementation of network architecture in TensorFlow:

def build_cnn(config, hparams) -> tf.keras.Model:

params = config["params"]

inputs = tf.keras.layers.Input((1024, ))

x = tf.keras.layers.Embedding(input_dim=1024, output_dim=16)(inputs)

conv_outputs = []

for filters, kernel in [

(128, 2),

(192, 3),

(256, 4),

(512, 5)

]:

data = tf.keras.layers.Conv1D(filters=filters, kernel_size=kernel, padding="valid")(x)

data = tf.keras.layers.BatchNormalization()(data)

data = tf.keras.layers.Conv1D(filters=filters, kernel_size=kernel, padding="valid")(data)

data = tf.keras.layers.Lambda(lambda a: tf.reduce_sum(a, axis=1))(data)

conv_outputs.append(data)

# Concatenation: output size is sum of all convolution filters

concat_output = tf.keras.layers.Concatenate()(conv_outputs)

concat_output = tf.keras.layers.BatchNormalization()(concat_output)

concat_output = tf.keras.layers.Dense(480, activation="relu")(concat_output)

concat_output = tf.keras.layers.BatchNormalization()(concat_output)

concat_output = tf.keras.layers.Dense(480, activation="relu")(concat_output)

concat_output = tf.keras.layers.BatchNormalization()(concat_output)

outputs = tf.keras.layers.Dense(500, activation="sigmoid")(concat_output)

return tf.keras.Model(inputs=inputs, outputs=outputs)

As my metric I use F1Score from tensorflow_addons:

model.compile(

loss=tf.keras.losses.BinaryCrossentropy(),

optimizer=tf.keras.optimizers.Adam(learning_rate=0.001),

metrics=[

F1Score(num_classes=500, average="micro", threshold=0.5, name="f1_micro"),

F1Score(num_classes=500, average="macro", threshold=0.5, name="f1_macro"),

]

)

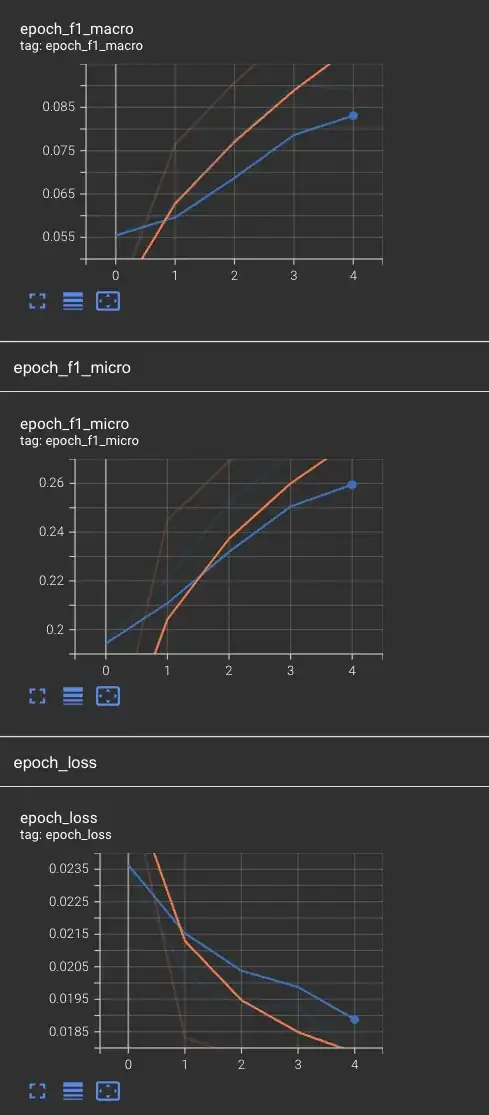

After 5 epochs results look like this (later it wasn't really improving):

My first idea was to apply class weights but it didn't help. Next idea was an experiment with only 10 tags (still multilabel) and this is the result:

{

"step": 12,

"loss": 0.2092941254377365,

"f1_micro": 0.6354409456253052,

"f1_macro": 0.5389766097068787,

"val_loss": 0.25258970260620117,

"val_f1_micro": 0.547073483467102,

"val_f1_macro": 0.4620901644229889

}

Last thing: after I saw some results with evaluation I saw that most of the outputs are empty or only 1 class, but using smaller threshold didn't increase the metric

Any idea how to improve the model for bigger number of tags? is it the metric?