I am learning reinforcement learning for games by following Gridworld examples. Apologies in advance if this is a basic question; I am very new to reinforcement learning.

I am slightly confused about scenarios where the probabilities of moving up, down, left and right are not provided. In that case, I assume we follow the optimal policy, and therefore you would apply the Bellman equation as:

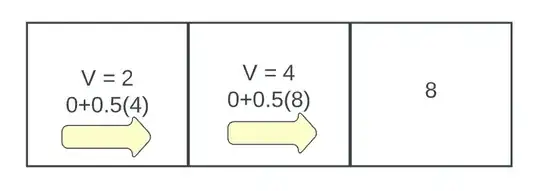

$V(s) = \max_a \left( R(s,a) + \gamma V(s') \right)$

The cost of any movement is 0, and the agent can choose to terminate on a numbered square to collect a reward equal to that square's number. This is why the square closest to the reward takes the value 8: the action that moves to the next state lets the agent terminate there and collect the reward.
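
For concreteness, here is a minimal sketch of the calculation as I understand it. Everything specific in it is an assumption made for illustration and is not taken from the figure: a hypothetical 1x4 corridor, a right-most square numbered 10, a discount factor of 0.8, deterministic moves, and bumping into a wall leaving the agent in place. The function name `value_iteration` is my own.

```python
# Hypothetical setup (NOT taken from the figure): a 1x4 corridor whose
# right-most square is numbered 10, with an assumed discount factor of 0.8.
GAMMA = 0.8
ROWS, COLS = 1, 4
REWARDS = {(0, 3): 10.0}  # numbered squares: terminating here collects the number
MOVES = [(-1, 0), (1, 0), (0, -1), (0, 1)]  # up, down, left, right


def value_iteration(tol=1e-9, max_sweeps=1000):
    """Repeatedly apply V(s) = max_a (R(s,a) + gamma * V(s')) until values settle."""
    V = {(r, c): 0.0 for r in range(ROWS) for c in range(COLS)}
    for _ in range(max_sweeps):
        delta = 0.0
        for s in V:
            candidates = []
            # Option 1: terminate on a numbered square and collect its reward.
            if s in REWARDS:
                candidates.append(REWARDS[s])
            # Option 2: move deterministically at zero cost.
            for dr, dc in MOVES:
                nxt = (s[0] + dr, s[1] + dc)
                if nxt not in V:  # assumed: hitting a wall leaves the agent in place
                    nxt = s
                candidates.append(0.0 + GAMMA * V[nxt])
            best = max(candidates)
            delta = max(delta, abs(best - V[s]))
            V[s] = best  # in-place update within the sweep
        if delta < tol:
            break
    return V


if __name__ == "__main__":
    for state, value in sorted(value_iteration().items()):
        print(state, round(value, 4))
```

Under these assumed numbers, the square next to the numbered one comes out at roughly 0.8 × 10 = 8, and each square further away is discounted by another factor of 0.8.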

Would this be the correct way to determine the value for the surrounding grid squares?