When training a seq2seq model, you have a source and a target. The source may be a sentence such as:

I_walked_the_dog

and the target is:

_walked_the_dogg
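The pairing above amounts to shifting the sentence by one character, so the input at step t is trained to predict the character at step t+1. Here is a minimal sketch. `next_char_pairs` is a hypothetical helper. It simply drops the final position (which has no successor) instead of padding it, so it does not reproduce the trailing character of the target shown above.

```python
def next_char_pairs(text: str) -> list[tuple[str, str]]:
    # Hypothetical helper: pair each character (input at step t) with the
    # character after it (target at step t). The last character has no
    # successor in this sketch, so it gets no pair.
    return list(zip(text[:-1], text[1:]))


pairs = next_char_pairs("I_walked_the_dog")
print(pairs[:3])  # [('I', '_'), ('_', 'w'), ('w', 'a')]
print(len(pairs))  # 15
```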

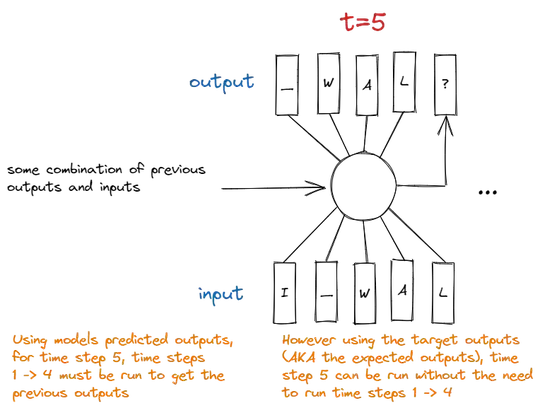

As you can see, the expected output for the initial `I` is a space (`_`). My question is: at training time, should the model's previous outputs be used to predict the next output, or should all time steps be trained simultaneously using the expected outputs? To illustrate this more clearly, see below:

The incentive for training on the expected outputs is that all time steps can be trained simultaneously, which can speed up training by up to a factor of the sequence length. However, it means that training is not representative of what the network will actually be doing at test time: there, the network will not have perfect previous outputs, only its own.
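To make the two options concrete, here is a sketch with a hypothetical toy "model" (`TOY_MODEL`, `predict_next`). Using the expected outputs as inputs is commonly called teacher forcing; feeding the model its own previous outputs is free-running decoding. The toy model predicts the next character from the previous one only and has no hidden state, which is why the teacher-forced steps are independent of each other here. In a recurrent network the hidden state still has to be computed step by step even under teacher forcing. The sketch also assumes the target is the source shifted by one character.

```python
# Hypothetical toy "model" for illustration: it predicts the next character
# from the previous character only (a bigram lookup), so it cannot tell
# what should follow "_", "e" or "d" in this sentence.
TOY_MODEL = {"I": "_", "_": "w", "w": "a", "a": "l", "l": "k", "k": "e",
             "e": "d", "d": "_", "t": "h", "h": "e", "o": "g"}


def predict_next(prev: str) -> str:
    """Return the toy model's guess for the character after `prev`."""
    return TOY_MODEL.get(prev, "?")


def teacher_forced(source: str) -> str:
    # Every input is a ground-truth character, so no step depends on the
    # model's other predictions and all steps could be computed in parallel.
    return "".join(predict_next(c) for c in source[:-1])


def free_running(first: str, steps: int) -> str:
    # Each step is fed the model's own previous prediction, so steps must
    # run one after another and an early mistake carries forward.
    out, prev = [], first
    for _ in range(steps):
        prev = predict_next(prev)
        out.append(prev)
    return "".join(out)


def count_correct(pred: str, target: str) -> int:
    # Number of positions where the prediction matches the target.
    return sum(p == t for p, t in zip(pred, target))


source = "I_walked_the_dog"
target = source[1:]  # next-character targets: "_walked_the_dog"

tf = teacher_forced(source)
fr = free_running(source[0], len(target))
print(tf, count_correct(tf, target))  # _walked_whedw_g 11
print(fr, count_correct(fr, target))  # _walked_walked_ 8
```

Under teacher forcing, each mistake stays local because the next step is given the true character again. In free-running mode, the first mistake (`w` instead of `t`) sends the model back into "walked", and every later step is wrong. This gap between the training and test conditions is the mismatch described above.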