Suppose I use a tansig activation function in the output layer of an artificial neural network giving me outputs in the range $[-1,1]$ and my model is applied to a binary classification problem, should my target labels be -1 and 1 or 0 and 1? I've always used 0 and 1, but now I'm questioning this.

-

1Why are you not using `sigmoid/softmax` for binary classification? – hanugm May 05 '22 at 08:23

-

Would you recommend this? Maybe I should do that. Are there any guidelines available in terms of suitable activation functions for the hidden layers too? – Rowan Barua May 05 '22 at 14:30

-

Yeah, In general, `relu` for hidden layers and `sigmoid` for the output layer. – hanugm May 05 '22 at 14:54

-

Amazing!! Thank you! Seems to have improved results massively by replacing tansig with sigmoid. Now I'll try replacing my tansig hidden layer functions with relu. – Rowan Barua May 05 '22 at 15:08

2 Answers

If you have already trained your model to predict either -1 or 1, and now your target variable is either 0 or 1, if -1 predicted by your model is the same as 0 in your target variable, then there are two ways you can do this:

- Use a postprocessing function, which maps the models output, -1 becomes 0, and 1 remains as it is

- Replace your target labels, 0 becomes -1, and 1 remains as it is

- 111

- 2

Yes, you should use an activation function that match the range of your ground truth labels, or the other way around, i.e. apply a normalization function to the labels to match your activation function.

If the range of model predictions and target differ in range, the model might still learn but the convergence will be slower, since you're basically allowing a broader range or loss values. With both predictions and labels within range [0, 1] for example, the difference between a prediction and its label will also be in the same range. If you allow predictions to be in range [-1, 1] then you could have errors larger than 1, for example $p=-1, t=1, |t-p|=2$.

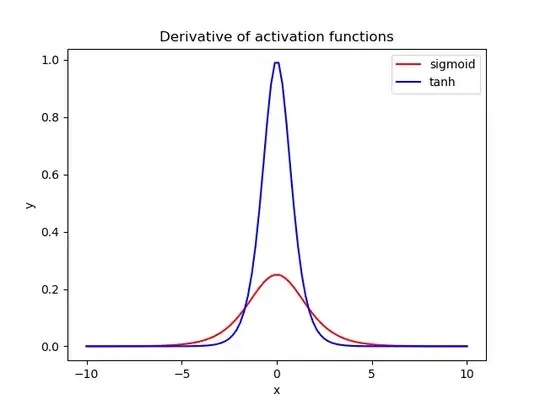

The decision about mapping the predictions to the targets range or the way around depends on the specific case you tackling. Sigmoid and tanh for example behave differently with respect to the gradients, the latter producing larger gradients, as you can see from the derivatives comparisons. So if you want larger gradients you might want to keep the tanh and map you targets to the range [-1,1]

- 5,153

- 1

- 11

- 25