After trying to understand what was happening by going through the algorithm on paper (below), I found all values were the same, so greedifying with respect to the value function often just had the agent going around in circles. Reducing the discount factor from 1 to anything below 1 gave me 100 percent success.

This is expected (and optimal, as defined) behaviour with a discount factor of 1 in the deterministic case. With a reward of 1 at the end, no discounting and and no negative rewards for taking its time, the agent has infinite time to complete the task. So whatever it does, provided it does not step into a hole at any point, is optimal.

The caveat is that when following the policy as a test, you need to use random choice to resolve ties (this is assumed in the theory behind value iteration, that the agent can reach the high rewards). Most simple argmax functions will not do that, so you will need to add some kind of shuffle or other random selection over the maximum results to ensure you are really following the policy that value iteration discovered the state value function for.

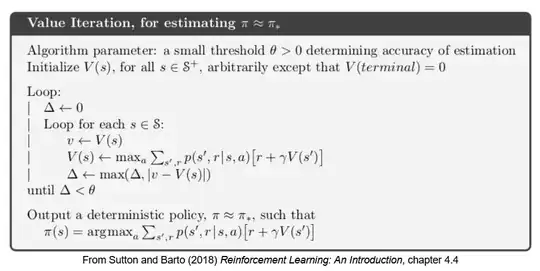

The last sentence in the pseudocode is ignoring the possibility that you have this kind of situation, so it is not strictly true in all cases. That is, you cannot reliably build a working deterministic policy directly from the optimal value function when $\gamma=1$ for environments like Frozen Lake.

But going to anything below 0.9 gave me worse results in the slippery case.

I am less sure about what is happening in this case. Given that you successfully found an optimal value function in the deterministic environment, then this may actually be optimal too. Whether or not it is may depend on the map - an optimal agent with low discount factor may prefer not to "run a gauntlet" early with risk of failure even if the overall probability of success is better. That may cause it to prefer a safer start that leads to a tougher end, where it is forced to take more risks overall than would be optimal for a goal of completing the most episodes.

how to interpret and choose the discount factor for any use case?

The discount factor is not a free choice for the agent, and it is not a hyperparameter for the learning method in value iteration. Instead, it is part of the definion for what is and isn't optimal. So you should select it based on the problem definition.

In an episodic environment where the agent's goal is to reach a certain state or states as fast as possible, there are two "natural" formulations:

Zero reward for most transitions, "goal" rewards for reaching certain terminal states, and a discount factor below $1$. I think your choice of $0.9$ would be fine, and it is commonly used in the literature for this kind of toy environment.

Negative reward for most transitions, "goal" rewards for reaching certain terminal states. If there is a single "target" state, such as exiting a maze, the "goal" reward can be zero. Usually no discounting, but you could add discounting if it represents something else about the problem.

In more complex systems, the discount factor may also become a hyperparameter of the agent. For instance, it does so when using function approximation with temporal difference learning, because it can dampen positive feedback from bootstrapping. In those cases, where "naturally" there would be no discounting, then you may want to use the highest value that prevents the estimator diverging e.g. $\gamma = 0.99$.