First of all, I think it is very hard to properly reason about these things, but there are a few points that might justify using sum instead of concatenation.

For example, concatenation would have the drawback of increasing the dimensionality. So for subsequent residual connections to work, you would either have to use the increased dimensionality throughout the model, or add yet another layer to transform it back to the original dimensionality.

The thing is, these embeddings carry different types of information, so intuitively adding them together doesn't really make sense. I mean, you cannot add 2 meters to 3 kilograms, but you can make a tuple

I would say that because the token-embedding is learned, you cannot really compare it to a fix unit like kilogram. Instead the embedding space of the token can be optimized to work with the positional encoding under summation.

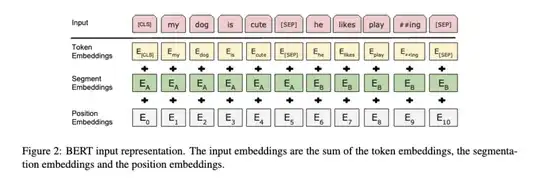

By adding them together, we are assuming the information about token, segmentation, and position can be simultaneously represented in the same embedding space, but that sounds like a bold claim.

The same applies here, the problem is not to embed them into the same space, but rather that subsequent layers can separate the position information from the token information.

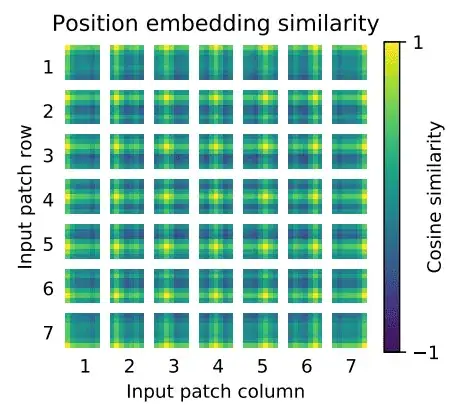

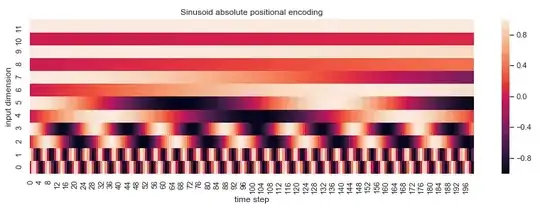

And I think this is possible for two reasons. Firstly, if you look at the visual representation of the positional embedding, the highest distortion by summation would happen in the first dimensions:

(Image taken from here)

(Image taken from here)

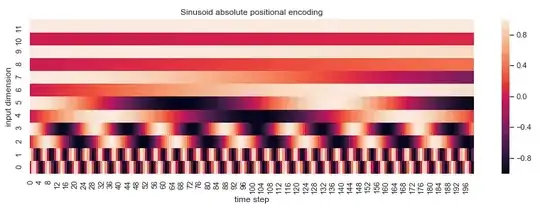

Therefore the token embedding could learn to encode high-frequency information only in the last dimensions to be less affected by the positional embedding.

I think another interesting statement in the Transformer paper is that the positional encoding behaves linearly w.r.t. relative position:

We chose this function because we hypothesized it would allow the model to easily learn to attend by relative positions, since for any fixed offset $k$, $PE_{pos+k}$ can be represented as a linear function of $PE_{pos}$.

[Source: Transformer Paper]

So this property shouldn't add additional non linearity to the token embedding, but instead acts more like a linear transformation, since any change in position changes the the embedding linearly. In my intuition this should also enable easy separation of positional vs token information.

This is my intuition so far, I am happy to hear your thoughts and additions

(Image taken from

(Image taken from