I am fairly new to GANs and decided to implement a WGAN. Much of the information online seems to contradict itself, and the more I read, the more confused I get, so I'm hoping y'all can clear up my misunderstanding of the WGAN loss.

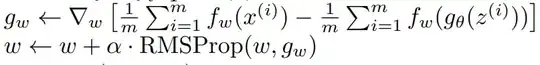

g_w is the gradient with respect to the critic's parameters w, and g_θ is the gradient with respect to the generator's parameters θ.
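For reference, these are the gradient and update steps from Algorithm 1 of the WGAN paper (Arjovsky, Chintala and Bottou, 2017), which I'm assuming is what the first image shows. Here f_w is the critic, m the batch size, α the learning rate and c the weight-clipping bound; the critic steps are repeated n_critic times per generator step. Note that the paper reuses g_θ both for the generator network (as in g_θ(z)) and for the generator's gradient:

```latex
\begin{aligned}
g_w &\leftarrow \nabla_w \Big[\tfrac{1}{m}\textstyle\sum_{i=1}^{m} f_w\big(x^{(i)}\big) - \tfrac{1}{m}\textstyle\sum_{i=1}^{m} f_w\big(g_\theta(z^{(i)})\big)\Big] \\
w &\leftarrow w + \alpha \cdot \mathrm{RMSProp}(w, g_w) \qquad \text{(critic: gradient ascent)} \\
w &\leftarrow \mathrm{clip}(w, -c, c) \\
g_\theta &\leftarrow -\nabla_\theta \tfrac{1}{m}\textstyle\sum_{i=1}^{m} f_w\big(g_\theta(z^{(i)})\big) \\
\theta &\leftarrow \theta - \alpha \cdot \mathrm{RMSProp}(\theta, g_\theta) \qquad \text{(generator: gradient descent)}
\end{aligned}
```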

From my understanding, the loss functions show that:

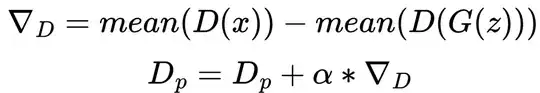

- The critic wants to minimize its loss. Splitting the loss function up, this means it wants to:
  - minimize its score on real data
  - maximize its score on fake data
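For comparison, here is a minimal Python sketch of the critic objective from Algorithm 1 of the WGAN paper, rewritten as a loss to minimize. The sign flip is a common implementation convention (assumed here; the paper itself writes the step as gradient ascent). The scores are made-up numbers standing in for critic outputs f_w(x), and `critic_loss` is a hypothetical helper name:

```python
from statistics import fmean


def critic_loss(real_scores, fake_scores):
    """Critic loss under the 'minimize' convention (hypothetical helper).

    The paper performs gradient ascent on
        mean(f_w(real)) - mean(f_w(fake));
    minimizing the negation below moves the weights the same way:
    it pushes scores on real samples up and scores on fake samples down.
    """
    return fmean(fake_scores) - fmean(real_scores)


# Made-up critic scores f_w(x) for one batch of real and generated samples.
real = [2.0, 1.5, 2.5]
fake = [-1.0, -0.5, -1.5]
print(critic_loss(real, fake))  # -> -3.0
```

Under this convention, swapping the two batches (real scored low, fakes scored high) would give a loss of +3.0 instead, i.e. a worse value for the critic.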

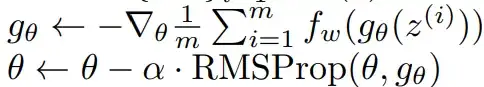

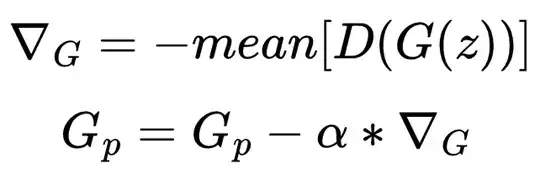

- The generator wants to maximize the critic score on fake data. So it wants to make the data it generates seem more fake to the critic?
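A minimal Python sketch of the generator step from Algorithm 1 of the WGAN paper, again written as a loss to minimize (an implementation convention assumed here) and using made-up critic scores; `generator_loss` is a hypothetical helper name:

```python
from statistics import fmean


def generator_loss(fake_scores):
    """Generator loss under the 'minimize' convention (hypothetical helper).

    The paper sets g_theta = -grad_theta mean(f_w(g_theta(z))) and then
    descends along it, which is the same as minimizing -mean(f_w(fake)),
    i.e. raising the critic's average score on generated samples.
    """
    return -fmean(fake_scores)


# Made-up critic scores on generated samples before and after an update.
before = [-1.0, -0.5, -1.5]
after = [0.5, 1.0, 0.0]
print(generator_loss(before))  # -> 1.0
print(generator_loss(after))   # -> -0.5
```

In the paper's convention the critic is trained to give real samples the higher scores, so lowering this loss (raising f_w on fakes) moves the generated samples toward what the critic currently rates as real.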

Since the critic gives a high score to fake data and a low score to real data, why would the generator want to maximize the critic's score on its samples? Wouldn't that mean the generator wants to make its data appear more "fake" to the critic? I would have thought the generator would want to minimize that score instead, to make its data look more real (since real data gets a low score).