ETA: More concise wording: Why do some implementations use batches of data taken from within the same sequence? Does this not make the cell state useless?

Take an LSTM as the example: it has a hidden state and a cell state, and both are updated as new inputs are passed to the LSTM. The problem is that if you use batches of data taken from the same time frame, the hidden state and cell state can't be computed from the previous values.

This is a major problem, because carrying state forward from earlier time steps is the main advantage of an LSTM.

For an example of what I mean, take the following: a simple LSTM used to predict a sine wave. At training time, I can't think of any way you could use batches. Since there is only one time series to predict, you have to start at time step 0 in order to properly compute and train the hidden state and cell state.

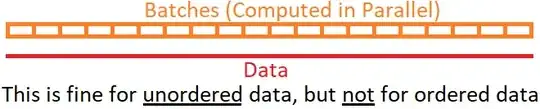

Taking batches like in the image below and computing them in parallel will mean the hidden and cell state can't be computed properly.

And yet, in the example I gave, the batch_size is set to 10. This makes no sense to me. It also doesn't help that TensorFlow syntax isn't exactly the most verbose...
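
To make the setup concrete, here is a minimal NumPy sketch of how a batch_size of 10 can arise from a single series. It assumes the example builds its batches from overlapping sliding windows, which is a common pattern but not something I have confirmed for that particular code. The names `series`, `window_len`, `windows` and `targets` are hypothetical and chosen only for illustration.

```python
import numpy as np

# One single time series: a sine wave sampled at 200 points.
series = np.sin(np.linspace(0, 8 * np.pi, 200))

window_len = 25   # hypothetical number of time steps fed to the LSTM per sample
batch_size = 10   # the value used in the example being discussed

# Each sample is a window of consecutive values; its target is the next value.
num_windows = len(series) - window_len
windows = np.stack([series[s:s + window_len] for s in range(num_windows)])
windows = windows[..., None]      # shape: (num_windows, window_len, 1)
targets = series[window_len:]     # shape: (num_windows,)

# A batch is 10 windows cut from the SAME series, processed in parallel.
batch_x = windows[:batch_size]    # shape: (batch_size, window_len, 1)
batch_y = targets[:batch_size]    # shape: (batch_size,)
```

Under this assumption, every row of `batch_x` is itself a short sequence, so any state the LSTM builds up can only come from the `window_len` steps inside that row, not from the rest of the series.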

The only use case for batches in an LSTM I can see is if you have multiple totally independent time series that can all be computed from time step 0 in parallel, with each having its own cell and hidden state.
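
As a sanity check on that use case, the sketch below runs a hand-written single-layer LSTM over a batch of three independent sequences and compares the result with running each sequence on its own. It is a simplified NumPy illustration with random weights, not the TensorFlow or PyTorch implementation; `lstm_forward` and the weight names `W`, `U`, `b` are hypothetical.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def lstm_forward(x, W, U, b):
    """Run a single-layer LSTM over x of shape (batch, time, features).

    Every batch row starts from a zero hidden and cell state and keeps
    its own state for the whole sequence. Returns the final (h, c),
    each of shape (batch, hidden).
    """
    batch, steps, _ = x.shape
    hidden = U.shape[0]
    h = np.zeros((batch, hidden))
    c = np.zeros((batch, hidden))
    for t in range(steps):
        gates = x[:, t, :] @ W + h @ U + b          # (batch, 4 * hidden)
        i, f, g, o = np.split(gates, 4, axis=1)     # input, forget, candidate, output
        c = sigmoid(f) * c + sigmoid(i) * np.tanh(g)
        h = sigmoid(o) * np.tanh(c)
    return h, c

rng = np.random.default_rng(0)
features, hidden = 1, 4
W = rng.normal(size=(features, 4 * hidden))
U = rng.normal(size=(hidden, 4 * hidden))
b = np.zeros(4 * hidden)

# Three independent sequences of 20 steps each, all starting at time step 0.
x = rng.normal(size=(3, 20, features))

h_batch, c_batch = lstm_forward(x, W, U, b)
h_single = np.concatenate(
    [lstm_forward(x[k:k + 1], W, U, b)[0] for k in range(3)]
)

# Batched and one-at-a-time results agree: rows never share state.
rows_match = np.allclose(h_batch, h_single)
```

The batch dimension only parallelises the computation. Each row has its own hidden and cell state, and nothing is carried from one row to another.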

My implementation

I actually duplicated the example LSTM from above, but used PyTorch instead. My code can be found in a Kaggle notebook here. As you can see, I've commented out the LSTM from the model and replaced it with a fully connected (fc) layer, which performs just as well as the LSTM because, like I said, using batches in this way makes the LSTM utterly redundant.